Welcome to our Annual Report

As we look back on the year, we are reminded of the importance of our mission and the impact of our work. The Norwegian research infrastructure services (NRIS) is a collaboration between Sigma2 and the universities of Bergen (UiB), Oslo (UiO), Tromsø (UiT The Arctic University of Norway) and NTNU to provide national supercomputing and data storage services. These services are operated by NRIS and coordinated and managed by Sigma2.

As we reflect on the past year and look forward to the future, we remain committed to our mission of providing accessible, advanced technology to the research community. We invite you to explore our report and learn more about our achievements in 2023.

Content

- Managing Director's corner

- Insights from the board room

- Paving the way for AI with supercomputing

- Graphics processing units (GPUs) are catalysts for AI research

- The researchers who are using the national services

- Our services in 2023

- Fast-tracking the digital transformation of industry

- Collaborations out and beyond

- Behind the curtain — Meet the team

1. Managing Director's corner

It is a great pleasure to present our Annual Report for 2023.

We work constantly to live up to our values, with particular attention to openness, customer and user focus, and societal responsibility.

As part of our societal responsibility, we strive to utilise the national resources in the most appropriate way possible. Here, our Resource Allocation Committee (RFK), which allocates our resources to the most eligible science projects, is of utmost importance. I would thus like to express my heartfelt gratitude to the RFK for another year of diligent work.

Our users are at the heart of what we do, and we are delighted by the positive feedback from our annual user survey. The high satisfaction expressed regarding the services and support received from NRIS, our collaborative initiative with university partners, is truly rewarding.

This past year, we underwent a challenging process of renegotiating our collaboration agreement due to changing priorities and reduced university funding, which also led to a reduction in funding for Sigma2 from 100 MNOK to 53 MNOK. The Research Council is maintaining its funding at the same level as before (50 MNOK).

Despite these challenges, a significant positive outcome from the renegotiation was the strong support for Sigma2 and the national e-infrastructure for HPC and large storage from the universities' top management. We have also established a "Partnership Meeting" with top management representatives from the partner universities, to set the ambition level and secure the well-being of the collaboration.

We have taken a small but significant step forward with the introduction of a new Gender Equality Plan and the initiation of a general sustainability activity.

Many of the research projects utilising our e-infrastructure are focused on sustainability. In this report, you can read about how our largest user in terms of consumed CPU-hours is accelerating the use of hydrogen as fuel.

Another project I hope you will find interesting is how chemistry researchers are utilising Artificial Intelligence (AI). This leads us to a significant trend that impacted Sigma2 in 2023: the rise of Artificial Intelligence and Machine Learning (ML). In this year's report, we have therefore spotlighted activities and research related to AI.

We have established a very good starting point for researchers interested in exploring AI, all the way up to those working on large language models (LLMs).

Sigma2 together with our university partners in NRIS is well positioned to handle the expected increase in demand for AI resources now, despite concerns about potential hardware resource shortages.

The National Competence Centre is also deeply involved in AI activities and in 2023 had its first big involvement with the public sector. More about this below. Happy reading!

2. Insights from the board room: Reflections on last year and visions of the future.

"The emergence of advanced language models is expanding the applications of HPC, broadening its usage beyond what we see today."

Tom Røtting, Chair of the Sigma2 Board.

3. Paving the way for AI with supercomputing

Access to supercomputing and large-scale data handling is becoming increasingly crucial for digital transformation across almost all sectors. Maintaining supercomputers nationally is advantageous to Norway as a research nation. Not only is the procurement of required infrastructure and equipment expensive, but the systems are also technically demanding for any researcher to utilise. This approach enables comprehensive services and expert support to all researchers, fostering collaboration across our research institutions.

However, the seamless integration of supercomputing and large-scale data handling into our current research landscape hasn't happened overnight. It's the outcome of years of tireless work and dedication of all partners in NRIS. The journey up to this point, where access to supercomputing is efficient, cost-saving and fairly awarded based on scientific quality, has been long and challenging. But it's a journey that has undoubtedly been worth it, as we now stand at the forefront of a digital transformation reliant on our services.

HPC and storage, key players in the development of large language models

2023 saw a race to provide the best language models, making artificial intelligence common property. Large Language Models (LLMs) and similar advanced AI models rely on vast data sets and have an almost unlimited need for computing power during training. The processing power and data storage capacity needed often exceed what a single institution or university can provide in-house.

During 2023, we experienced a significant surge in demand for cloud services running on the NIRD Service Platform. Also, our annual user survey picked up an overwhelming 230 % increase in the demand for the Service Platform. This surge is largely driven by AI workloads – particularly in the areas of Natural Language Processing (NLP) and Large Language Models.

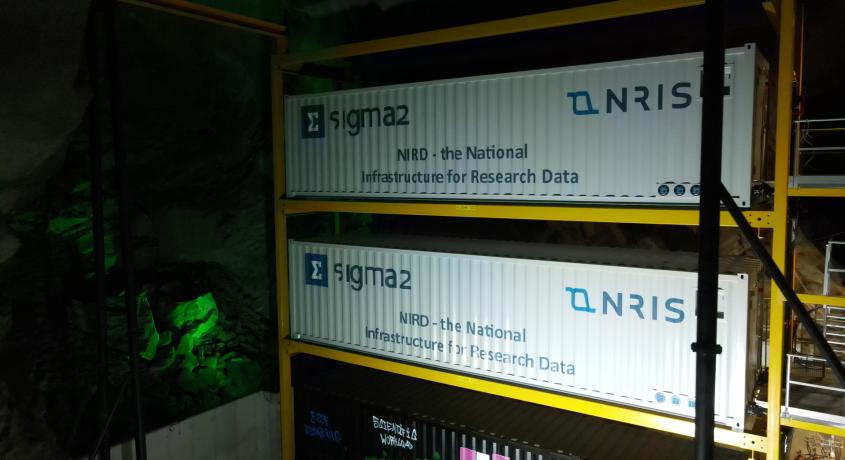

Therefore, it was good news when, in November, we successfully consolidated and relocated the hardware for cloud services in Tromsø and Trondheim to Lefdal Mine Datacenter (LMD) on the Norwegian West Coast. This hardware is now seamlessly integrated with the NIRD Service Platform, which boasts 2,368 vCPU cores, 9 TiB RAM, and 3.3 PFLOPS (32-bit) of deep learning performance. This upgrade will significantly enhance our ability to support the ever-growing need for artificial intelligence (AI), machine learning (ML) and deep learning (DL) workflows.

The AI collaboration that will benefit Norway

The technology currently being adopted for AI closely aligns with HPC, and these infrastructures naturally complement each other. This is also in line with the recommendations in the Multiannual Strategy Plan from EuroHPC. Therefore, Sigma2 is proud to participate in the Norwegian AI Cloud (NAIC) initiative. Launched in the autumn of 2023, this project aims to gather and share AI resources for research and society efficiently.

While AI thrives in academia and larger organisations, smaller communities and startups often face resource challenges. NAIC is set to address this by becoming Norway's leading AI infrastructure, offering computational resources, data-sharing opportunities, and fostering expertise development across sectors.

NAIC will develop services and skills that will soon become part of the national e-infrastructure. We are very happy to be a part of this collaboration, alongside the University of Oslo (UiO) (Project Leader), the University of Bergen (UiB), NTNU, UiT The Arctic University of Norway, the University of Agder (UiA), Simula Research Laboratory and NORCE Norwegian Research Centre. The project is funded by the Norwegian Research Council.

Take a journey through the history of the Norwegian e-infrastructure

3 Large Language Models trained in just weeks.

4. Graphics processing units (GPUs) are catalysts for AI research

With the growing demand for AI resources from Norwegian scientists, we are steadily working towards increasing the amount of available AI capacity in the form of Graphics Processing Units (GPUs). Access to GPUs is crucial for those working in AI and machine learning. GPUs dramatically increase the speed of computations, and modern AI tools have built-in support for GPUs, which can significantly boost performance compared to a standard processor.

Until the past year, Sigma2 had not experienced significant demand for computational power for artificial intelligence or GPUs. Even so, Sigma2 has provided GPUs for several years, initially on the NIRD Service Platform and eventually on traditional computing facilities.

The two major providers of GPUs are AMD and NVIDIA, with NVIDIA being the largest and offering a more comprehensive development environment and software suite. By the end of 2023, the supercomputer Betzy had 16 NVIDIA A100/40GB GPUs, and Saga had 32 NVIDIA A100/80GB and 32 NVIDIA P100 GPUs. The largest proportion of our GPUs is on LUMI (240 AMD MI250X). However, some users encounter difficulties using LUMI's AMD GPUs. This is primarily because code written for NVIDIA GPUs does not always run on AMD GPUs automatically and, in some cases, does not run at all without modifications.

In addition to the currently available GPUs, we are well underway with the procurement process to secure Norway's next national supercomputer. Here, we expect much of the computing power to come from GPUs.

A dedicated team of GPU experts

In the NRIS organisation, we have a dedicated team of experts who guide our users in optimising high-performance computing (HPC) and Artificial Intelligence (AI) applications by leveraging the power of GPUs. This optimisation allows researchers to effectively use large HPC systems like the supercomputer LUMI, significantly reducing computing time from weeks to mere days. This efficiency enhances scientific advancements. The team's responsibilities range from enhancing code for GPU accelerators to disseminating GPU-related activities.

A year of diverse projects and technological advancements for the GPU team

In 2023, the GPU team participated in several projects within the HPC and AI domains. Their involvement ranged from implementing GPU-programming models like CUDA/HIP in combination with MPI, porting applications from the NVIDIA-GPU-framework (CUDA) to the AMD-GPU framework (HIP), to incorporating distributed training in Deep Learning (DL) applications using the Horovod framework.
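To give a feel for what porting from CUDA to HIP involves at the simplest level, here is a minimal Python sketch of a source-to-source rename. It is a toy illustration only, not the GPU team's workflow: the mapping table covers a handful of CUDA runtime names and their HIP counterparts, the function name `port_to_hip` and the sample source are hypothetical, and real ports would normally rely on dedicated tools such as the hipify utilities plus manual work.

```python
import re

# Illustrative subset of CUDA runtime names and their HIP counterparts.
# Real porting tools cover far more of the API than this table does.
CUDA_TO_HIP = {
    "cuda_runtime.h": "hip/hip_runtime.h",
    "cudaMalloc": "hipMalloc",
    "cudaMemcpy": "hipMemcpy",
    "cudaMemcpyHostToDevice": "hipMemcpyHostToDevice",
    "cudaMemcpyDeviceToHost": "hipMemcpyDeviceToHost",
    "cudaDeviceSynchronize": "hipDeviceSynchronize",
    "cudaFree": "hipFree",
}

# Match whole identifiers only, so cudaMemcpy is never matched
# inside the longer name cudaMemcpyHostToDevice.
_PATTERN = re.compile(
    "|".join(
        r"(?<![A-Za-z0-9_])" + re.escape(name) + r"(?![A-Za-z0-9_])"
        for name in CUDA_TO_HIP
    )
)


def port_to_hip(source: str) -> tuple[str, list[str]]:
    """Rename known CUDA names and list CUDA-style names left untranslated."""
    ported = _PATTERN.sub(lambda m: CUDA_TO_HIP[m.group(0)], source)
    # Anything still starting with cuda/cublas/cufft needs manual attention.
    leftovers = sorted(set(re.findall(r"\b(?:cuda|cublas|cufft)\w*", ported)))
    return ported, leftovers


# Hypothetical CUDA host code used purely as input for the sketch.
cuda_source = """
#include <cuda_runtime.h>
cudaMalloc(&d_x, n * sizeof(float));
cudaMemcpy(d_x, x, n * sizeof(float), cudaMemcpyHostToDevice);
scale<<<blocks, threads>>>(d_x, n);
cublasCreate(&handle);
cudaFree(d_x);
"""

ported, leftovers = port_to_hip(cuda_source)
print(ported)
print("Needs manual porting:", leftovers)
```

The runtime calls translate mechanically, while the library call is reported as a leftover. This mirrors why some codes run on AMD GPUs after a simple conversion and others need hands-on modification.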

One notable accomplishment of the GPU team is the porting of the ISCE2 code, a NASA-funded framework developed for processing radar data. This was achieved in collaboration with researcher Zhenming Wu from the University of Tromsø, who is currently affiliated with SNF (Samfunns- og næringslivsforskning AS). The GPU team's contribution enables Zhenming to fully utilise the capacity of LUMI-G.

"It has been a wonderful experience working as a team, and collaborating with you has been a pleasure."

Zhenming Wu, UiT The Arctic University of Norway

Another compelling milestone for the GPU team is the implementation of a distributed version of a multimodal learning model application. This enabled Assistant Professor Rogelio Mancisidor at the Department of Data Science and Analytics at BI Norwegian Business School to train large models containing millions of trainable parameters on extensive datasets in a short amount of time.

Navigating the AI times ahead

As AI technology continues to progress and the volume of data expands, utilising AI to address scientific challenges requires deploying large DL models and training them on substantial datasets. Such a process would barely be possible on a single GPU-based server.

"Here, the GPU team will play an important role in helping researchers accelerate their AI applications for large-scale computations," says Hicham Agueny, the leader of the GPU team, commenting on the future outlook of AI.

GPUs on the agenda

Meeting researchers' future demand for HPC and GPUs

5. The researchers who are using the national services

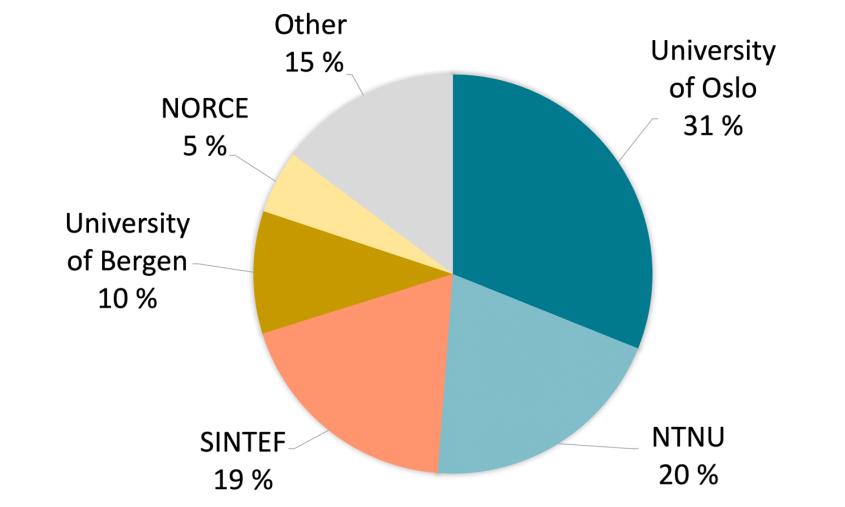

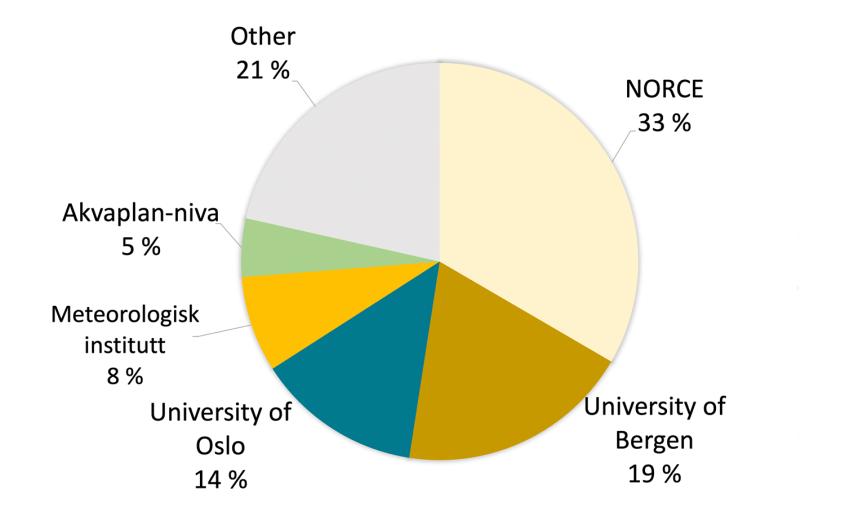

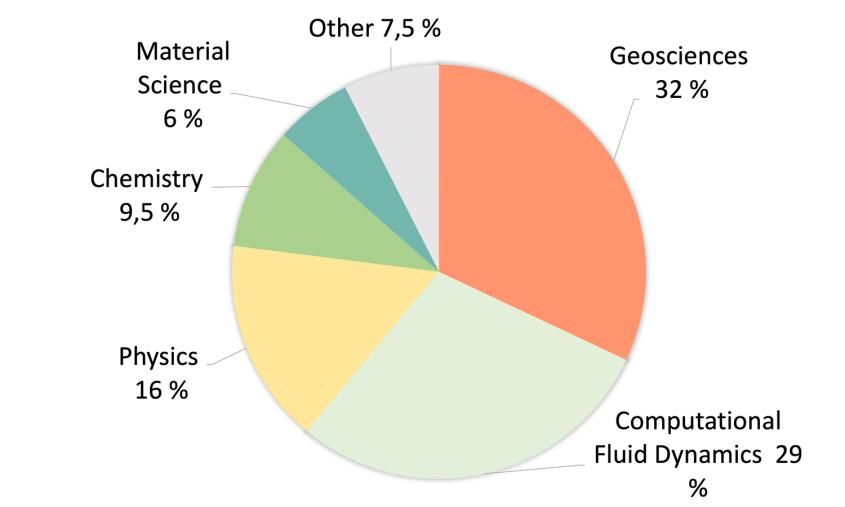

Continuous advancements in technology and digital methodologies are driving increasing demand for high-performance computing and storage services across scientific fields. In 2023, more than 650 projects and nearly 2,400 users utilised the national systems, collectively receiving 1,410.4 million CPU hours and over 31.8 petabytes of storage capacity.

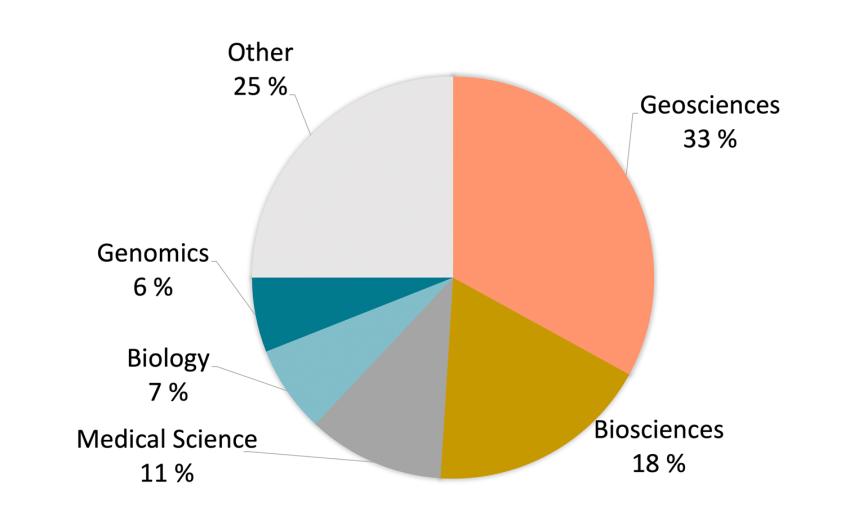

Geosciences, as in previous years, remains the primary user of computing resources (down 2 % since 2022) while we also see an increase in computational fluid dynamics research (up 7 % from 2022). Climate research leads in terms of storage requirements.

Diagrams showing the largest users by scientific field and institution are included below.

Top users by institution and field of science

Top 5 HPC projects by awarded CPU hours in 2023

1. Combustion of hydrogen blends in gas turbines at reheat conditions

Research into Carbon Capture and Storage (CCS) for fossil fuel-based power generation is crucial in mitigating climate change. With modern gas turbines (GTs) finely tuned for conventional fuel mixtures, CCS introduces challenges due to variations in fuel and operating conditions.

To address this, high-resolution computational fluid dynamics is used to study hydrogen-blend combustion in gas turbines, aiming to understand thermo-acoustic behaviour in staged combustion chambers. Utilising Sandia NL's S3D code and supercomputing resources, this research seeks to ensure stable and safe gas turbine operation, contributing to climate change mitigation efforts.

- Project leader: Andrea Gruber

- Institution: SINTEF Energi AS

This project was awarded 190 million CPU hours.

2. Solar Atmospheric modelling

The Sun's impact on Earth is crucial, affecting human health, technology, and critical infrastructures. Solar magnetism is central to understanding the Sun's magnetic field, its activity cycle, and its effects on our environment.

Mats Carlsson and his fellow researchers are at the forefront of modelling the Sun's atmosphere with the Bifrost code, aiding the comprehension of the Sun's outer magnetic atmosphere, which influences phenomena like solar wind, satellites and climate patterns.

- Project leader: Mats Carlsson

- Institution: Rosseland Centre for Solar Physics, UiO

This project was awarded 167 million CPU hours.

3. Quantum chemical studies of molecular properties and energetics of large and complex systems

The Hylleraas Centre for Quantum Molecular Sciences employs advanced computational chemistry codes to simulate complex molecular systems interacting with fields and radiation. Their mission is to comprehend and predict new chemistry, physics, and biology in molecules under extreme conditions and electromagnetic fields, employing cutting-edge computational methods and parallel computing resources.

- Project leader: Thomas Bondo Pedersen

- Institution: The Hylleraas Centre for Quantum Molecular Sciences (UiO)

This project was awarded 95 million CPU hours.

4. Heat and Mass Transfer in Turbulent Suspensions

This project focuses on performing detailed simulations of heat and mass transfer in two-phase systems, involving dilute suspensions of particles or bubbles in gas or liquid flows. These systems are common in both natural and technological settings.

Building upon advancements in flow simulation algorithms, particularly in the areas of turbulent heat transfer and droplet evaporation, the project seeks to explore the added complexity introduced by phase-change thermodynamics. This complexity includes variable droplet size and phenomena like film boiling, marking a significant advancement from previous studies.

- Project leader: Luca Brandt

- Institution: Department of Energy and Process Engineering, NTNU

This project was awarded 94 million CPU hours.

5. Bjerknes Climate Prediction Unit

The Bjerknes Climate Prediction Unit focuses on developing prediction models to address climate and weather-related challenges. These include predicting precipitation for hydroelectric power, sea surface temperatures for fisheries, and sea-ice conditions for the shipping industry.

Bridging the gap between short-term weather forecasts and long-term climate projections, their goal is to create a highly accurate prediction system for northern climate. This involves understanding climate variability, developing data assimilation methods, and exploring the limits of predictability.

- Project leader: Noel Sebastian Keenlyside

- Institution: Geophysical Institute, UiB

This project was awarded 92 million CPU hours.

Top 5 storage projects by allocated storage in 2023

1. High-Performance Language Technologies

This timely project addresses the rapid advancements in Natural Language Processing (NLP) and its strong presence in Norwegian universities.

It focuses on countering the dominance of major American and Chinese tech companies in training Very Large Language Models (VLLMs) and Machine Translation (MT) systems, which have wide applications.

To foster diversity, the project aims to lower entry barriers by establishing a European language data space, facilitating data gathering, computation, and reproducibility for universities and industries to develop free language and translation models across European languages and beyond.

- Project leader: Stephan Oepen

- Institution: Department of Informatics, UiO

4,398 terabytes (TB) of allocated storage.

2. Storage for nationally coordinated NorESM experiments

The Norwegian climate research community has developed and maintained the Norwegian Earth System Model (NorESM) since 2007. NorESM has been instrumental in international climate assessments, including the 5th IPCC report through CMIP5.

The consortium, comprising multiple research institutions, is dedicated to contributing to the next phase, CMIP6, in partnership with global modelling centres. This collaborative effort seeks to advance our knowledge of climate change and provide accessible data to scientists, policymakers and the public.

- Project leader: Mats Bentsen

- Institution: NORCE Norwegian Research Centre AS

1,979 TB of allocated storage.

3. Bjerknes Climate Prediction Unit

The Bjerknes Climate Prediction Unit focuses on developing advanced prediction models to address climate and weather-related challenges, such as predicting precipitation for hydroelectric power, sea surface temperatures for fisheries, and sea-ice conditions for shipping.

They aim to bridge the gap between short-term weather forecasts and long-term climate projections by assimilating real-world weather data into climate models. This project faces three key challenges: understanding predictability mechanisms, developing data assimilation methods, and assessing climate predictability limits.

- Project leader: Noel Keenlyside

- Institution: Geophysical Institute, UiB

1,539 TB of allocated storage.

4. Storage for INES — Infrastructure for Norwegian Earth System modelling

The INES project, led by Norwegian climate research institutions, maintains and enhances the Norwegian Earth System Model (NorESM). Its goals include providing a cutting-edge Earth System Model, efficient simulation infrastructure, and compatibility with international climate data standards.

The Norwegian Climate Modeling Consortium plans to increase contributions for further development and alignment with research objectives.

- Project leader: Terje Koren Berntsen

- Institution: Department of Geosciences, UiO

1,319 TB of allocated storage.

5. High-latitude coastal circulation modelling

This project, managed by Akvaplan-niva, encompasses physical oceanographic modelling activities, covering coastal ocean circulation modelling in the Arctic, Antarctic, and along the Norwegian coast.

These activities involve storing significant amounts of data, including high-resolution coastal models and atmospheric model output needed to force the ocean circulation models. To achieve high resolution in various regions, we must store substantial model data and results from specific simulations.

- Project leader: Magnus Drivdal

- Institution: Akvaplan-niva AS

990 TB of allocated storage.

We enhance excellent research for a better world: A selection of highlighted projects

In today's world, nearly every aspect of life is influenced by technology. We rely on scientific and technological advancements to help address the urgent issue of climate change and pave the way for a sustainable, green future for ourselves and future generations.

This requires the integration of insights from various scientific disciplines with innovative research and technologies.

We thus take substantial pride in the impactful research facilitated by our national e-infrastructure services. We are confident these research projects will produce new and invaluable scientific insights.

We hope you enjoy this selection of highlighted use cases we have prepared for you.

6. Our services in 2023

We are working continuously to improve our services to ensure that we meet Norwegian scientists' requirements and demands.

High-Performance Computing (HPC)

2023 was another year of continued operation for HPC. Although no new systems were put into operation, the workhorses Betzy, Fram and Saga satisfied the demand for computing resources.

The oldest system, Fram, has been in high demand and has delivered CPU resources to a diverse community.

The LUMI service has also seen considerable progress during the last year. The integration with the national accounting system has been improved, and we see a steady increase in demand for LUMI's GPU resources. LUMI is de facto a GPU system (95% of the capacity), and building a research load on a new GPU architecture takes time.

Although still in high demand, Saga's P100 GPUs are approaching their end of life. To upgrade the GPU offering, 32 new A100 GPUs were added to the system during the autumn, so the load can be phased over from the P100s to the A100s.

Our systems run on the Red Hat and CentOS distributions, and the recently announced end of life of the current major versions required major maintenance breaks for OS upgrades of Fram and Saga last year. Upgrading the major OS version is a large undertaking, but both upgrades were completed without significant incidents. Betzy must be upgraded during 2024.

No system lasts forever, and as the oldest of the three, Fram will eventually have to be replaced. In December, the invitation to tender for Fram's replacement was finally announced, with the expectation of replacing Fram in 2025.

Course Resources as a Service (CRaaS)

Course Resources as a Service (CRaaS) assists researchers in facilitating courses or workshops with easy access to national e-infrastructure resources, used for educational purposes.

Projects utilising the CRaaS service during 2023 have spanned physics and biotechnology as well as GPU programming and computational training on our supercomputers. Nearly 350 users have accessed the service.

easyDMP (data planning)

Data planning is essential to all researchers who work with digital scientific data. The easyDMP tool is designed to facilitate the data management process from the initial planning phase to storing, analysing, archiving, and finally sharing the research data at the end of your project. Presently, easyDMP features 1526 users and 1598 plans from 256 organisations, departments or institutions.

However, creating a plan is not the only challenge in research data management. Plans need to be reviewed, shared, executed and much more. In 2023, we began internal brainstorming on which functionalities data management tools need in order to best cover the needs of researchers, providers and funders. The actionability of data management rules is increasingly becoming a requirement in data-driven science, and this is especially true in the AI era. This work will proceed in 2024.

NIRD Service Platform

With an increasing volume of research data and technology possibilities, the NIRD Service Platform has become essential for many research environments. This is because researchers can now launch services and consume data through services directly on the NIRD storage infrastructure without moving data elsewhere. In 2023, we launched the service platform on the new NIRD and migrated all the services onto the new infrastructure.

Furthermore, in 2023 we saw a steady increase in services launched by end-users, such as community-specific portals, analytical platforms or tailored AI algorithms. The mixed CPU/GPU architecture of the NIRD Service Platform is optimised to run data-intensive computing workflows, such as pre-/post-processing, visualisation and AI/ML analysis, without any queuing time. In 2023, the average allocation of Kubernetes CPUs was 1,055 (out of a total of 1,024 physical cores in production). The highest Kubernetes CPU allocation was 1,180 cores.
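The allocation figures can be put in relation to the physical core count with a little arithmetic. The sketch below simply divides the reported allocations by the 1,024 physical cores. The function name `allocation_ratio` is illustrative, and how allocations can exceed the physical core count (for example through hardware threads or overcommitment) is an assumption not stated in this report.

```python
def allocation_ratio(allocated_cpus: float, physical_cores: int) -> float:
    """Return allocated Kubernetes CPUs as a fraction of physical cores."""
    return allocated_cpus / physical_cores


PHYSICAL_CORES = 1024  # physical cores in production, as reported above

average = allocation_ratio(1055, PHYSICAL_CORES)
peak = allocation_ratio(1180, PHYSICAL_CORES)

print(f"average: {average:.1%}, peak: {peak:.1%}")
# prints "average: 103.0%, peak: 115.2%"
```

In other words, the platform was on average allocated about 3 % above its physical core count, and about 15 % above at peak.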

NIRD Data Storage

Together with the national supercomputers, the national storage system NIRD forms the backbone of the national e-infrastructure for research and education in Norway.

In 2023 we went into full production with the new NIRD, which was procured and tested the year before. But 2023 has also been the year of the first expansion of the new NIRD, which now features a total capacity of 49 PB distributed over two systems placed in Lefdal Mine Datacenter. The two systems are physically separated and have different characteristics to serve a variety of use cases.

One system is a high-performance storage service tailored for scientific projects and investigations. It accelerates data-driven discoveries by providing a platform optimized for pre/post-processing, intensive I/O operations, supporting high-performance computing, and accommodating AI workloads.

The other system is a cost-optimized storage service designed for sharing and storing data during and beyond project lifetimes. Offering unified file and object storage with S3, NFS, and POSIX access, it strikes the right balance between performance and cost.

These two hardware components pave the way for two diverse storage services, set to officially launch in 2024.

NIRD Toolkit

The NIRD Toolkit is a research platform for easily spinning up software on demand on the NIRD Service Platform, thus avoiding cumbersome IT operations. With the NIRD Toolkit, computations with Spark, RStudio, and several popular artificial intelligence algorithms are possible in a few clicks.

Since 2023, the NIRD Toolkit has had a refreshed look and a new authentication mechanism for a more secure and friendly user experience.

Research Data Archive

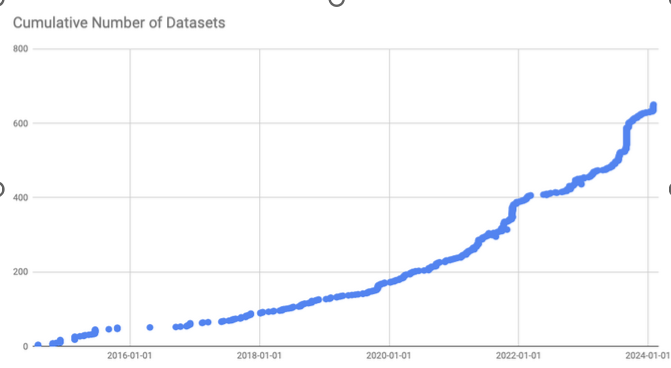

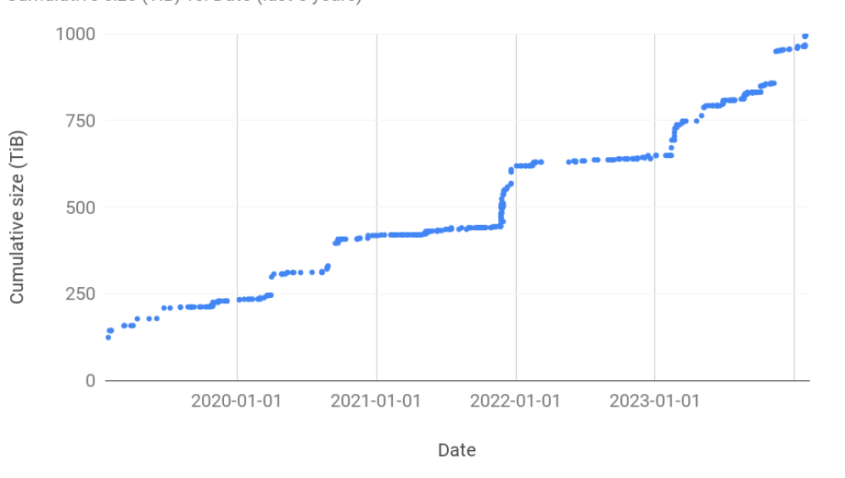

In 2023, 205 new datasets were deposited and published in the Research Data Archive, increasing the volume of the archived data by more than 300 TB. And this is only in one year!

Researchers' increasing awareness of FAIR and data management has resulted in a growing number of datasets deposited over recent years (see the figures above). The increasing trend is clear, apart from the temporary standstill in 2022 caused by the limited capacity of the old NIRD.

The data landscape in research is changing quickly, and with it the concept of archiving and publishing. The Open Science paradigm and the FAIR principles have contributed to making datasets openly discoverable and accessible at large. Furthermore, new technologies and methodologies, such as AI/ML, are challenging old paradigms.

Already in 2021, we launched a project to design, procure and implement the next-generation Research Data Archive under the principles of user-friendliness, FAIR and interoperability by design. In 2023, we signed a contract with a new collaboration partner and procured the resources to develop the new Research Data Archive. The implementation started in October 2023 and will be finished by summer 2024. By then, all users will experience a brand-new, AI-ready and FAIR-by-design archive, all set to meet their future needs.

Sensitive Data Services (TSD)

Sensitive Data Services (TSD) is a service delivered by the University of Oslo and provided as a national service by Sigma2.

TSD offers a remote desktop solution for secure storage and high-performance computing of sensitive personal data. It is designed and set up to comply with the Norwegian regulations for individuals’ privacy.

TSD has been a part of the national e-infrastructure since 2018. We own 1600 cores in the HPC cluster. We also own 1370 TB of the TSD disk, which delivers storage for general use or HPC use. Furthermore, GPUs and high-memory nodes are available to support the most demanding statistical analysis workflows, such as those required by personalised medicine.

Advanced User Support

Advanced User Support (AUS) lets the experts within NRIS spend dedicated time going deeper into researchers' challenges. The effects are often of high impact, and the results are astonishing.

In 2023 the AUS activities included some very interesting collaborative projects, covering advanced usage across nearly all our infrastructure and services, from TSD (sensitive data) to LUMI and NIRD.

We also want to highlight the AUS User Liaison collaborations. This framework allows experts to have a foot inside the NRIS activities while maintaining a close tie to specific application communities, major research infrastructures and centres of excellence.

Basic User Support

Basic user support provides a national helpdesk that deals with user requests, such as issues relating to access, systems usage or software. Support tickets are handled by highly competent technical staff from NRIS.

The number of cases handled in our basic user support service continued to increase in 2023. Our user survey, which is conducted yearly to get feedback from the users and improve the support services, shows high satisfaction among our users.

A glimpse of what is coming

A sneak peek of what's to come can be found in our services roadmaps. For data storage, we are focusing on several key areas. We are developing and launching a new cold-data storage service, designed to provide a cost-effective solution for storing data that is not frequently accessed but needs to be retained for future use. We are also working on enabling the dispatch of jobs from the NIRD Service Platform to HPC to facilitate the scaling of work processes, allowing for more efficient and effective data processing.

In addition, the first version of the next-generation Research Data Archive is being developed and implemented. This new version will incorporate the latest advancements in data archiving technology, ensuring that our services remain at the forefront of the field.

Turning to High-Performance Computing (HPC), our focus is on improving the integration of HPC and NIRD storage. This will enhance the efficiency of data transfer between the two systems, leading to faster and more seamless data processing.

7. Fast-tracking the digital transformation of industry

National Competence Centre for HPC

The National Competence Centre (NCC) aids the industry, public and academic sectors by tapping into the competencies needed to take advantage of technologies such as High-Performance Computing (HPC), Artificial Intelligence (AI) and Machine Learning (ML), and associated technologies. The Centre is a collaboration between Sigma2, SINTEF and NORCE and aims to offer expertise and access to the national e-infrastructure services.

2023 saw a breakthrough in our project portfolio, with our first project helping public administration adopt HPC and leverage advanced ICT. The project, Safer borders with Artificial Intelligence, carried out with Norwegian Customs, was interesting in many ways. It gave us the opportunity to influence how data is used within public administration and to address the challenges of working with data subject to the General Data Protection Regulation (GDPR).

Streamlining customs declarations with automated data cleaning

Norwegian Customs receives customs declarations through online forms that are filled in manually. People often introduce inconsistencies, such as reversing the order of names or addresses or making simple typos. Unreliable data hinders the analysis and trend identification that are crucial for customs agents, so data cleaning is the first step. Manually cleaning millions of customs declarations each year is impractical. Our project with Customs demonstrated tools for automatic data cleaning.

Built-in visualisation features allowed us to see how different algorithms affected data clustering and correction. This enabled quick adjustments to parameters, algorithm weights, and feature focus for improved accuracy and faster algorithm tweaking. Visualisation also helps explain data acquisition and refinement processes in court if litigation arises.

We also supported Customs in implementing the proof of concept in a continuous integration workflow, so that improved algorithm tuning can be pushed automatically to their data analytics platform for immediate use by the customs intelligence department.

Bubble curtains against hurricanes

During a particular project with Oceantherm, which you can read more about below, the partnership with the independent research institutes demonstrated its importance in pairing advanced ICT with domain competence.

Here, computational fluid dynamics (CFD) and digital workflows were required to simulate and demonstrate the practical viability of an idea as unthinkable as gaining control of the energy potential in hurricanes through the novel use of bubble curtains.

Utilising HPC and innovative technology to mitigate hurricane impacts

Spreading the word

Gaining visibility is crucial for the NCC. This is achieved by sharing project success stories and writing articles that highlight innovative uses of HPC or ICT. These articles aim to engage potential customers by showing how these technologies could apply to their situations.

It is also important for the NCC to attend conferences as speakers, to address potential customers by sharing success stories and talking about the services the NCC provides.

The most important conferences we attend are hosted by partners such as the NORA network, which reaches a national AI audience, or by regional industry clusters such as Thamsklyngen or Næringsforeningen i Trondheimsregionen.

Looking to 2024, the NCC will continue to gain experience from helping industry and public administration and use an analytic approach to understand how the centre can be the decisive factor for the increased use of HPC and complement the national e-infrastructure services for HPC.

8. Collaborations out and beyond

To keep up to speed with global digital developments, international collaborations are necessary to ensure Norwegian access to more powerful computing resources than we have available domestically.

Through Sigma2's international partnerships, Norwegian researchers can access services, including computing time on large-scale systems.

EuroHPC Joint Undertaking

There is a lot of activity from the EuroHPC Joint Undertaking (JU) in the form of JU-initiated project calls and procurements, some directly or indirectly involving Norwegian institutions. More EuroHPC systems are becoming available, which in turn means more HPC resources that Norwegian researchers can apply for. The construction of JUPITER, Europe’s first ARM-based exascale HPC system, located at and operated by the Jülich Supercomputing Centre (JSC) in Germany, is under way, and the system will be operational by the end of 2024. The next EuroHPC exascale system will be Jules Verne, hosted by GENCI, the French national agency for High-Performance Computing. But until these new beasts are up and running, LUMI, the Queen of the North, will continue to be the most powerful supercomputer in Europe. More on LUMI below.

Additionally, in the area of quantum computing, six projects for EuroHPC quantum computers were awarded funding in 2023, among them LUMI-Q, in which Norway participates through Sigma2, Simula and SINTEF. Several of these systems will become available for researchers in 2024. The EuroHPC National Competence Centres are now in their second phase, and the Norwegian Competence Centre, headed by Sigma2, has helped Norwegian industry and public administration in groundbreaking projects utilising HPC and AI. More on these things below as well.

Norway is represented on the JU’s Governing Board by the Research Council, and Sigma2 supports the Research Council as an adviser. As the national e-infrastructure provider, Sigma2 is responsible for implementing Norwegian participation in EuroHPC. This arrangement has been working very well, thanks in large part to a very good collaboration with the Research Council and to Sigma2’s many years of experience from participating in PRACE and other European projects.

PRACE — Partnership for Advanced Computing in Europe

The dawn of a new PRACE

PRACE is transforming from a partnership of countries’ national HPC providers, awarding research projects access to compute resources on the systems of hosting members, into an association of HPC users and stakeholders of many flavours. In the “new” PRACE, everyone from big HPC centres, via universities and companies, to individual users comes together around common interests.

The transformation is a consequence of the new ecosystem for HPC in Europe, where the EuroHPC Joint Undertaking is currently delivering compute resources in Europe but not covering all aspects of the HPC ecosystem. The “new” PRACE will also aim to foster the creation of an HPC user association for academics, industrial users, and HPC centres in Europe. In this new role, PRACE will continue to be an essential part of the European HPC ecosystem, hopefully representing a much stronger voice of the users.

NeIC — Nordic e-Infrastructure Collaboration

Development of best-in-class e-infrastructure services beyond national capabilities

Sigma2 is a partner in NeIC – the Nordic e-Infrastructure Collaboration – bringing the national e-infrastructure providers in the Nordics and Estonia together. Hosted by NordForsk, which facilitates cooperation on research and research infrastructure across the Nordic region, NeIC is based on strategic collaboration between the partner organisations: CSC (Finland), NAISS (Sweden), Sigma2 (Norway), DeIC (Denmark), RH Net (Iceland) and ETAIS (Estonia).

The 10-year Memorandum of Understanding (MoU) underpinning NeIC was set to expire in 2023 but has been extended through 2024 while work continues on reworking and renewing the basis for the NeIC organisational structure and funding, along with a new MoU.

Some highlights of NeIC projects with NRIS participation in 2023:

CodeRefinery 3

The goal of the CodeRefinery project is to provide researchers with training in the tools and techniques needed to create sustainable, modular, reusable, and reproducible software. CodeRefinery thus acts as a hub for FAIR (Findable, Accessible, Interoperable, and Reusable) software practices. Phase three of CodeRefinery started in 2022.

The result of this project is training events, training materials and training frameworks, as well as a set of software development e-infrastructure solutions, coupled with necessary technical expertise, onboarding activities and best practices guides, which together form a Nordic platform. In 2023, CodeRefinery conducted training events and arranged workshops for over 800 people.

Puhuri 2

Puhuri is developing seamless access to the EuroHPC LUMI supercomputer, as well as to other e-infrastructure resources used by researchers, public administration and industry. The project will continue to develop and deploy trans-national services for resource allocation and tracking, as well as federated access, authorisation, and group management. The portals are integrated into the Puhuri Core system, which propagates the resource allocations and user information to the LUMI EuroHPC supercomputer. Usage accounting information from LUMI flows back to the allocator’s portals, providing near real-time statistics about utilisation.

Nordic Tier-1

In the Nordic Tier-1 (NT1), the four Nordic CERN partners collaborate through NeIC and operate a high-quality and sustainable Nordic Tier-1 service supporting the CERN Large Hadron Collider (LHC) research programme. It is an outstanding example of promoting excellence in research in the Nordic Region and globally, and it is the only distributed Tier-1 site in the world. There are 13 Tier-1 facilities in the Worldwide LHC Computing Grid (WLCG), and their purpose is to store and process the data from CERN. The Nordic Tier-1 Grid Facility has been ranked among the top three Tier-1 sites according to the availability and reliability metrics for the ATLAS and ALICE Tier-1 sites.

By combining the independent national contributions through synchronised operation, the total Nordic contribution reaches a critical mass with higher impact, saves costs, pools competencies and enables more beneficial scientific returns by better serving large-scale storage and computing needs.

EOSC — The European Open Science Cloud

The ambition of the European Open Science Cloud (EOSC) is to provide European researchers, innovators, companies and citizens with a federated and open multi-disciplinary environment where they can publish, find and reuse data, tools and services for research, innovation and educational purposes. EOSC supports the EU’s policy of Open Science and aims to give the EU a global lead in research data management and ensure that European scientists enjoy the full benefits of data-driven science.

Since 2017, several projects have been working to define and establish policies and infrastructure to form the EOSC. Sigma2 and our partners through NRIS have offered competencies and contributed to the EOSC since the first-generation projects (starting from EOSC-hub in 2018). Sigma2 supports the partnership of Norwegian institutions with the EOSC Association by coordinating activities related to delivering services and infrastructure to the EOSC, where possible in synergy with activities in NeIC and under the EuroHPC Joint Undertaking umbrella.

Skills4EOSC

With HK-Dir, the Directorate for Higher Education and Skills in Norway, Sigma2 became a partner in the Skills4EOSC project in 2022. The project joins knowledge of national, regional, institutional and thematic Open Science (OS) and Data Competence Centres from 18 European countries to unify the current training landscape into a joint and trusted pan-European ecosystem.

The goal is to accelerate the upskilling of European researchers and data professionals within FAIR and Open Data, data-intensive science and Scientific Data Management.

DICE — Data Infrastructure for EOSC

This is the last of the H2020 INFRAEOSC-07a2 projects supporting the implementation of EOSC with e-infrastructure resources for data services.

DICE has been enabling a European storage and data management infrastructure for EOSC, providing generic services to store, find, access, and process data consistently and persistently while enriching the services to support sensitive data and long-term preservation. Through this project, Sigma2 has offered storage and sensitive data service resources to the users coming from the EOSC portal, thus supporting cross-border collaboration between Norwegian and European scientists.

In 2023, Norway offered 50 TiB of NIRD Storage to EOSC through DICE for users who request access to the resources through the EOSC Virtual Access mechanism. DICE has now ended.

ENTRUST

In 2023, Sigma2, in collaboration with the national partners ELIXIR Norway, UiO, UiB and NTNU, and with several international partners, contributed to the submission of a proposal in response to a call under the INFRA-EOSC programme. The scope of the call was the establishment within EOSC of a network of sensitive data services. The submitted proposal, led by ELIXIR in collaboration with the EUDAT CDI, was approved for funding, and the ENTRUST project will start in 2024.

The project aims to collectively design and demonstrate the interoperability layer to support a federation of sensitive data services in Europe. Sigma2, with EUDAT partners, will investigate the sustainability model for such a possible federation in collaboration with the partner universities, which offer the services and develop the interoperability layer. If successful, this will make using sensitive data services much more efficient for the users. This also relates to ongoing national work on Trusted Research Environments (TREs), which builds on the valuable experience from the Sensitive Data Services (TSD) at UiO but now extends the collaboration beyond TSD to include UiB and NTNU.

LUMI

The LUMI pre-exascale system, in which Norway owns a stake through Sigma2, is getting stronger by the day and helping researchers achieve outstanding results. In 2023, there was yet another system upgrade, and LUMI now includes a mind-boggling 10 240 AMD MI250X GPUs. LUMI has seen very high utilisation for most of 2023, and with the increased activity within the field of artificial intelligence, queuing times have increased. Several Norwegian projects have success stories from using LUMI, with compute resources allocated from the national share or the EuroHPC JU’s system share. Some of these projects are highlighted in this annual report.

NRIS has participated actively in the LUMI Consortium, providing two experts to the LUMI User Support Team (LUST) and experts to the various interest groups, and Sigma2 is a member of the Operational Management Board (OMB). With increased activity, the LUMI User Support Team and the two NRIS members dedicated to this team have been busy supporting users and organising training events.

9. Behind the curtain — Meet the team

The Norwegian research infrastructure services (NRIS) is a collaboration between Sigma2 and the universities of Bergen (UiB), Oslo (UiO), Tromsø (UiT The Arctic University of Norway) and NTNU to provide national supercomputing and data storage services. These services are operated by NRIS and coordinated and managed by Sigma2.

The people behind the Norwegian research infrastructure services.

Comprising employees from Sigma2 and IT experts from partner universities, NRIS forms a geographically distributed competence network. This ensures researchers have swift and easy access to domain-specific support.

In 2023, there was an increased emphasis on organising teams to group competencies and responsibilities, aiming to better support researchers using the Norwegian research infrastructure services.

At the end of 2023, NRIS accounted for 53 full-time equivalents and around 80 members.

Overview of the NRIS teams and their responsibilities

Each team within NRIS has its unique responsibilities:

- The Support Team manages daily support, prioritising and escalating tickets and answering user requests.

- The Training and Outreach Team offers free training events for our users, covering a diverse range of topics.

- The Software Team is responsible for the installation and maintenance of the scientific software and software installation environments on NRIS clusters.

- The Service Platform Team is in charge of the operation of the service platform, which is a Kubernetes cluster.

- The Infra Team operates and ensures the availability of our services.

- The GPU Team assists researchers with GPU-accelerating and porting applications.

- The Supporting and Monitoring Services (SMS) Team supports and monitors our internal systems.

Strategic leadership and future directions in e-infrastructure

Sigma2 coordinates and has strategic responsibility for the services, including product management and development, Norway's participation in international collaborations on e-infrastructure, and administration of resource allocation.

In 2023, we worked in particular on our application to the Research Council of Norway's INFRASTRUKTUR programme to secure funding for purchasing a new supercomputer to replace Betzy and Saga in the future.

We will continue to focus on strategic work going forward, generally to ensure that researchers in Norway continue to have access to state-of-the-art e-infrastructure services, and specifically to prepare to undergo a new evaluation in 2025.

By the end of 2023, Sigma2 counted 16 employees, of whom 31 % were women. In 2024, we will continue to strive for diversity and gender balance and work to promote equality in our organisation, committees, and the Board of Directors.

Our activities are jointly financed by the Research Council of Norway and the university partners (the universities of Bergen, Oslo and Tromsø, and NTNU).

The Board of Directors

Our board is chaired by the Director for the Data and Infrastructure Division at our parent company, Sikt. The six other board members comprise four representatives from the consortium partners and two external representatives: one from abroad and one director general of a national research institute.

The board in 2023

- Tom Røtting (Chair), Director for the Data and Infrastructure Division, Sikt

- Arne Smalås, Dean, Faculty of Science and Technology, UiT

- Ingelin Steinsland, Vice Dean of Research, NTNU

- Solveig Kristensen, Dean, The Faculty of Mathematics and Natural Sciences, UiO

- Gottfried Greve, Vice-Rector, UiB

- Ingela Nystrøm, Professor, Uppsala University

- Kenneth Ruud, Director General, Norwegian Defence Research Establishment (FFI)