The next national HPC and AI/ML platform

In the spring of 2024, Hewlett-Packard Norge AS (HPE) won the procurement competition and was awarded the contract, which is worth 225 million NOK. The new supercomputer, Olivia, will be Norway's most powerful yet. She will be installed in Lefdal Mine Datacenter during the spring of 2025.

Here's all you need to know about Olivia.

About the project

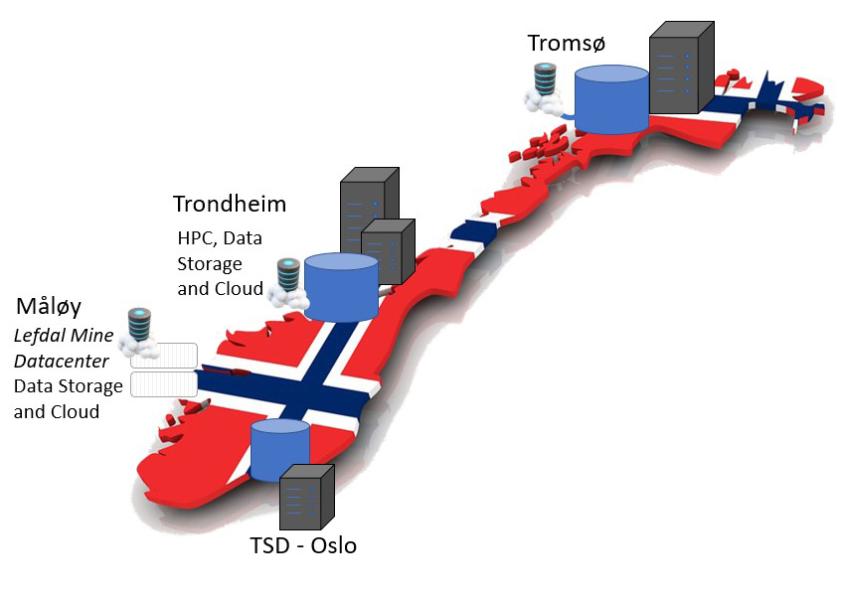

This project's objective is to acquire and deploy the next-generation national HPC resource (supercomputer) in the Sigma2 e-infrastructure portfolio. The working name for this resource is A2, now named Olivia. A2 will be placed in Lefdal Mine Datacenter, the same location as the new national storage infrastructure (NIRD).

Project goals

- Replace the current HPC machines Fram and Saga, and cover the forecast growth in usage.

- Provide computing capacity for AI/ML and scientific applications through both GPUs and CPUs.

- Procure a system with expandable computing and storage capacity.

Public Procurement Process

The procurement process has been carried out in line with the rules for public procurement. The procedure was carried out in two stages: an initial qualification phase, followed by a tender and negotiation phase in which the qualified suppliers were invited to submit their offers.

Installation

The installation will take place during the spring and summer of 2025, and the system will be opened to Norwegian researchers in the autumn of 2025. This will be Sigma2's first supercomputer installed in our data hall at Lefdal Mine Datacenter (LMD).

Background

HPC systems generally have a lifespan of approximately five years. After that, they are less energy-efficient than newer machines, their parts become obsolete, vendor support runs out, and new technology has arrived.

Our procurement strategy has traditionally had two legs, an A-leg and a B-leg, in which systems are acquired with an offset of 2 to 3 years.

Hewlett-Packard Norge AS won the contract in May 2024.

Read more about the contract and the technical specifications in our press release: Huge Contract Awarded —Norway's Most Powerful Supercomputer is Coming Soon