Welcome to the annual report 2022 of Sigma2 and NRIS!

Sigma2 collaborates with the universities of Bergen, Oslo and Tromsø, and with NTNU, to provide the Norwegian research infrastructure services (NRIS). Below, you will find highlights, numbers and facts from our joint work in 2022. We are particularly proud to present some of the research projects that have benefited from the national services over the past year. It is pleasing that demand for our services keeps increasing and that projects from new subject areas are applying for allocations. We hope you enjoy the report. Happy reading!

Managing Director's corner

2022 was a busy year for Sigma2 and the Norwegian research infrastructure services (NRIS).

To highlight some of the year's events, we celebrated the official inauguration of NIRD, the new National Infrastructure for Research Data, at Lefdal Mine Datacenter. We are eager to see the results of outstanding Norwegian research supported by this unique data storage infrastructure in the coming years, with a lot more data made available for re-use.

Supercomputers use a lot of electricity and can leave a significant carbon footprint unless we look for green solutions. We are thus looking forward to installing more national systems in Lefdal Mine Datacenter in the future. With its renewable energy source close to the facility, this is one of Europe's greenest data centre solutions.

Energy reuse will also be in place by the time our next supercomputer is put into production. In Finland, LUMI's data centre in Kajaani gets all its electricity from renewable hydropower, and the waste heat is used in the district heating network. This makes the total carbon footprint of LUMI negative.

In June, we saw another grand inauguration when the LUMI supercomputer, where Norway has a dedicated share, was officially opened in Finland.

With LUMI ranked as the third-fastest supercomputer in the world, we expect to see it support ground-breaking research in the years to come, from both Norwegian and European scientists.

We have worked continuously to improve our services and user experiences. Our new Research Data Archive is underway, and our resource administration system and the NIRD Toolkit were considerably enhanced in 2022. For a brief recap of key events, watch my short video. And if you wish to indulge in all the details, there is plenty to delve into below!

The researchers — Who are they?

Constant development in technology and digital working methods means that more and more scientific disciplines need high-performance computing and storage services to carry out research. In 2022, we saw almost 600 projects and 2,400 users on the national systems, which together were granted 1.8 billion CPU hours and over 24 petabytes of storage.

As in previous years, Geosciences is still the largest consumer of computing resources, while Climate research tops the list for storage needs. Below you can see the top 10 HPC projects and the top 10 storage projects in terms of allocated resources. You will also find diagrams showing the largest users by scientific field and institution.

Top 10 HPC projects

- Solar Atmospheric Modelling

- Project leader: Mats Carlsson

- Institution: Rosseland Centre for Solar Physics, University of Oslo

- Field of science: Physics

CPU hours: 216 million

- Combustion of hydrogen blends in gas turbines at reheat conditions

- Project leader: Andrea Gruber

- Institution: SINTEF

- Field of science: Computational Fluid Dynamics

CPU hours: 122 million

- Quantum chemical studies of molecular properties and energetics of large and complex systems

- Project leader: Trygve Helgaker

- Institution: Department of Chemistry, University of Oslo

- Field of science: Chemistry

CPU hours: 85 million

- Bjerknes Climate Prediction Unit

- Project leader: Noel Keenlyside

- Institution: Bjerknes Climate Prediction Unit, University of Bergen

- Field of science: Geosciences

CPU hours: 77 million

- Heat and Mass Transfer in Turbulent Suspensions

- Project leader: Luca Brandt

- Institution: Department of Energy and Process Engineering, NTNU

- Field of science: Computational Fluid Dynamics

CPU hours: 74 million

- Submarine landslides and their impacts on offshore infrastructures

- Project leader: Quoc-Anh Tran

- Institution: Department of Civil and Environmental Engineering, NTNU

- Field of science: Geosciences

CPU hours: 72 million

- Mapping peripheral protein-membrane interactions for a better description of the protein-lipid interactome

- Project leader: Nathalie Reuter

- Institution: Department of Chemistry, University of Bergen

- Field of science: Biosciences

CPU hours: 47 million

- Extreme planetary interiors

- Project leader: Razvan Caracas

- Institution: Department of Geosciences, University of Oslo

- Field of science: Geosciences

CPU hours: 38 million

- 3D forward modelling of lithosphere extension and inversion

- Project leader: Ritske Huismans

- Institution: Department of Earth Science, University of Bergen

- Field of science: Geosciences

CPU hours: 34 million

- Fundamental QCD Green's functions - dynamical lattice simulations at finite temperature

- Project leader: Paulo Silva

- Institution: Center for Physics, University of Coimbra

- Field of science: Physics

CPU hours: 33 million

Silva's project received CPU hours under the PRACE Distributed European Computing Initiative (DECI). More information about DECI is available in the section on international collaborations below.

Top 10 storage projects

- Storage for nationally coordinated NorESM experiments

- Project leader: Mats Bentsen

- Institution: NORCE

- Field of science: Climate

Usage: 1 935 TB

- Bjerknes Climate Prediction Unit

- Project leader: Noel Keenlyside

- Institution: University of Bergen

- Field of science: Climate

Usage: 995 TB

- Storage for INES - Infrastructure for Norwegian Earth System modelling

- Project leader: Mats Bentsen

- Institution: NORCE

- Field of science: Climate

Usage: 855 TB

- High latitude coastal circulation modeling

- Project leader: Magnus Drivdal

- Institution: Akvaplan-niva

- Field of science: Geosciences

Usage: 795 TB

- Synchronisation to enhance reliability of climate prediction

- Project leader: Noel Keenlyside

- Institution: University of Bergen

- Field of science: Climate

Usage: 563 TB

- European Monitoring and Evaluation Programme

- Project leader: Hilde Fagerli

- Institution: Norwegian Meteorological Institute

- Field of science: Geosciences

Usage: 465 TB

- Atmospheric Transport – Storage

- Project leader: Massimo Cassiani

- Institution: NILU (Norwegian Institute for Air Research)

- Field of science: Geosciences

Usage: 451 TB

- Norwegian Earth System Grid data services

- Project leader: Mats Bentsen

- Institution: NORCE

- Field of science: Geosciences

Usage: 408 TB

- Key Earth System Processes to understand Arctic Climate Warming and Northern Latitude Hydrological Cycle Changes

- Project leader: Michael Schulz

- Institution: Norwegian Meteorological Institute

- Field of science: Climate

Usage: 397 TB

- Impacts of Future Sea-Ice and Snow-Cover Changes on Climate, Green Growth and Society

- Project leader: Noel Keenlyside

- Institution: University of Bergen

- Field of science: Geosciences

Usage: 393 TB

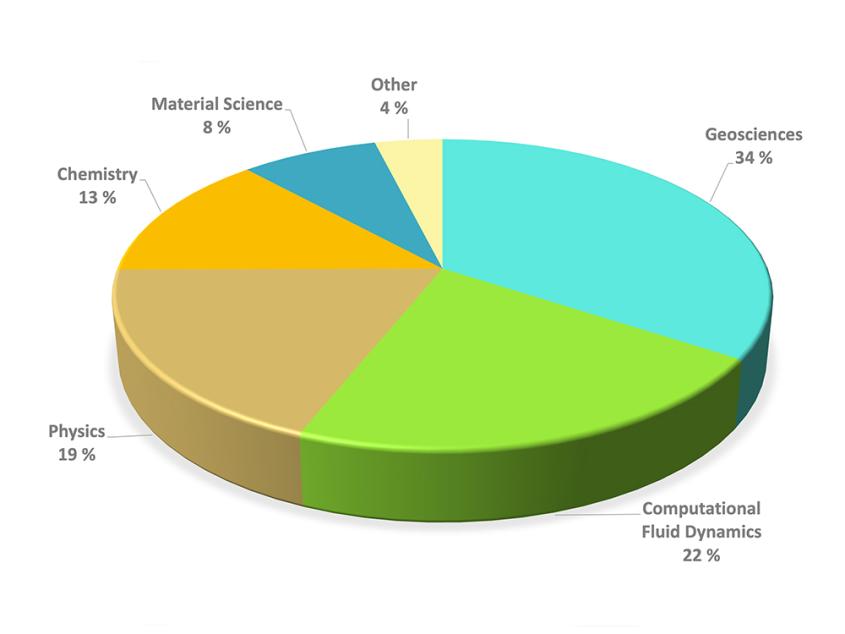

Top HPC users: field of science

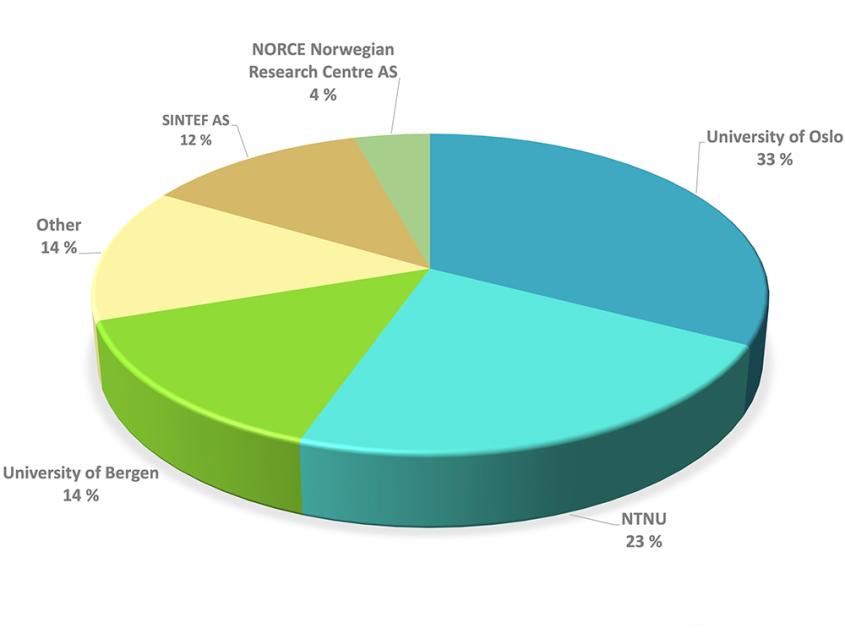

Top HPC users: by institution

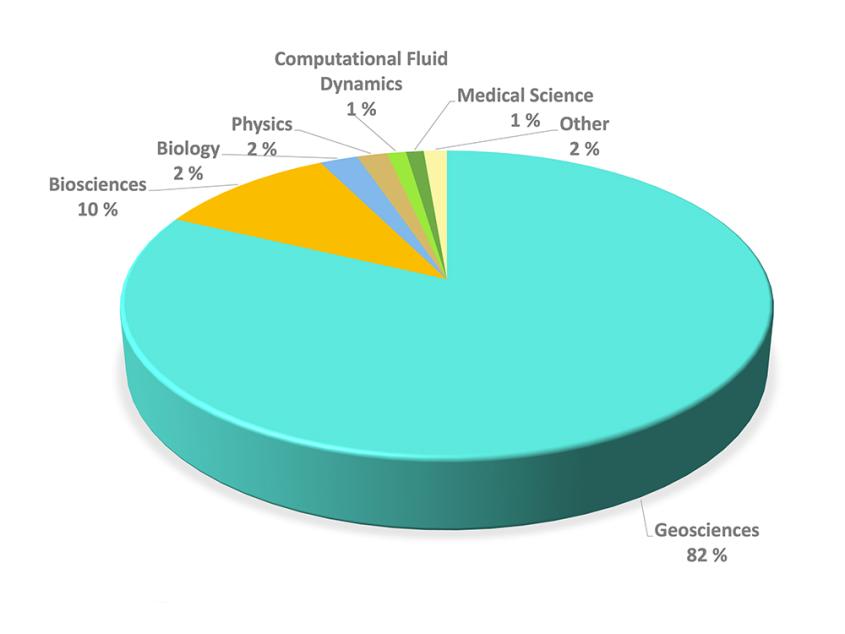

Top storage users: field of science

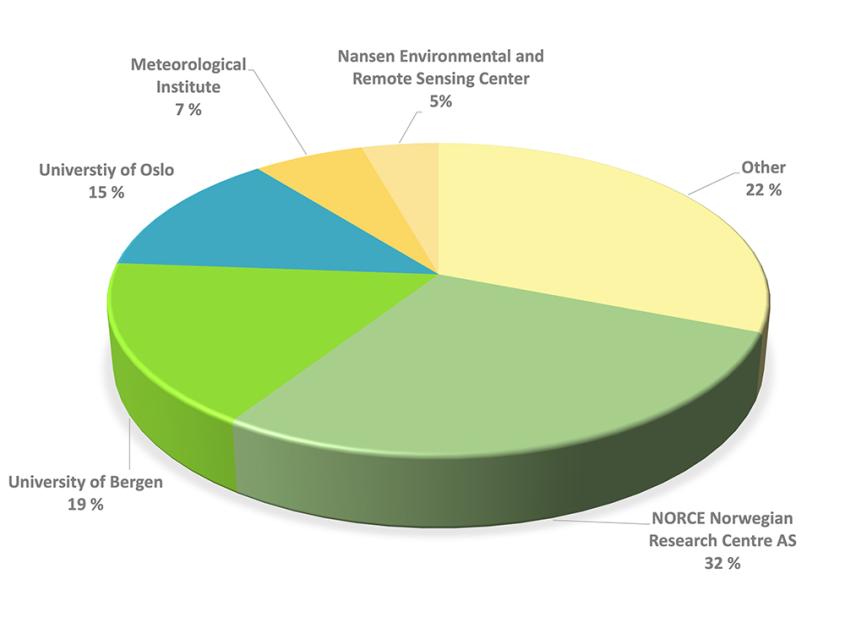

Top storage users: by institution

Research for a better future

Looking beyond our business, we see that 2022 was a challenging year: Russia’s devastating war on Ukraine, the European energy crisis, and last summer's heat waves and droughts on the continent. On a brighter note, the Covid-19 pandemic finally faded. For that, we can thank science and technology. The speed at which the vaccines were developed would not have been possible without supercomputers. In late autumn, the world was introduced to the new artificial intelligence tool ChatGPT, a model trained by machine learning to interact with humans. Many expect this to revolutionise our society, and we are excited to see what more will come from this.

The world today is driven by technology in almost every aspect. We look to science and technology to slow climate change and to create a green and sustainable future for us and the coming generations. To do this, we must combine knowledge from many fields of science with groundbreaking research and technologies. We are immensely proud of the meaningful research enabled by our national e-infrastructure services. We trust that the research projects will result in new and valuable knowledge.

Please enjoy this small selection of highlighted projects!

How do we ensure fair allocation of resources?

Demand for Sigma2's resources and services is continually increasing as more disciplines depend on HPC and large-scale data management to conduct research.

For many researchers, this entails applying for access to the national supercomputers Betzy, Fram and Saga; to NIRD, the storage system for scientific research data; and to the new European supercomputer LUMI, in which Sigma2 owns a share of the resources.

But how do we ensure a fair allocation of the national resources? And who gets to decide which projects are awarded access? Watch our short video to learn more about the Resource Allocation Committee.

Our services

Read on to learn about our services and discover what was new in the past year.

High-performance computing

During the Covid-19 pandemic, we made resources on the national supercomputers available to the Norwegian Institute of Public Health to calculate infection spread and expected vaccine effects. As the pandemic faded in 2022, so did its demand for HPC, and we could gradually free up more resources for other research and return to scientific production.

In 2022, we made no HPC hardware investments for the national e-infrastructure. However, during the year, the LUMI CPU quota (LUMI-C) was ready for production, while the GPU share of the machine received extensive pilot testing. At the end of the year, LUMI-G was made ready for production and welcomed by our GPU community.

Previous expansions of the HPC systems have provided sufficient CPU resources, yet GPU resources are in high demand. Even with the additional capacity from the LUMI GPUs, further expansions will be needed in the near future.

In 2022, we commenced the process of acquiring Norway’s next supercomputer. Among other things, we have worked to gain insight into which types of resources Norwegian researchers will need access to in the years ahead. We expect to complete the public procurement process by the end of 2023 and to put the new HPC system into production during 2024.

NIRD Storage

Together with the national supercomputers, the national storage system NIRD forms the backbone of the national e-infrastructure for research and education in Norway.

In 2022, we installed and tested the new national storage infrastructure for research data. The procurement was completed late the year before. Like its predecessor, the new storage system is named NIRD, the National Infrastructure for Research Data. The new NIRD consists of IBM technology and is the first national e-infrastructure system installed in the space we rent at Lefdal Mine Datacenter. The new NIRD has a capacity of 32 petabytes (PB) and can be expanded to a whopping 70 PB if needed. In comparison, the old NIRD had a capacity of 22 PB. The capacity will cover current and future needs for data storage under the paradigm of Open Science and FAIR data. NIRD was officially inaugurated in December 2022.

Now, researchers in Norway can look forward to state-of-the-art storage services that support artificial intelligence, machine learning, data-intensive analysis and archiving.

easyDMP (Data planning)

Data planning is essential to all researchers who work with digital scientific data. The easyDMP tool is designed to facilitate the data management process from the initial planning phase to storing, analysing, archiving, and finally sharing the research data at the end of your project. Presently, easyDMP has 1377 users and 1416 plans from 246 organisations, departments and institutions.

easyDMP is a step-by-step tool supporting several templates. In addition to templates following the Horizon 2020 and Science Europe recommendations, easyDMP supports institution-specific templates with prefilled answers and easy-to-use guides.

We collaborate with the EOSC-Nordic project and the Research Data Alliance (RDA) to keep improving the tool. In 2022, we used easyDMP as a testbed to design and prototype the concept of machine-actionable DMPs. As part of EOSC-Nordic, work was done on implementing support for importing and exporting DMPs that conform to the RDA machine-actionable DMP schema. Plans can now be exported and imported between tools, as long as they conform to the schema. Integration between easyDMP and the Data Stewardship Wizard also moved forward: plans can now be exported from Elixir’s Data Stewardship Wizard following the RDA schema. The next step is to import such plans into easyDMP.


NIRD Service Platform

The NIRD Service Platform is essential for many research environments that consume data on NIRD storage through services. In recent years, the popularity of the Service Platform has grown, as increasing data volumes are often more convenient to analyse and pre/post-process “locally”, i.e., directly on the storage infrastructure without moving data elsewhere. Furthermore, the possibility of spinning off community-specific portals that expose large datasets to external users allows data to be shared and reused in line with current policies and best practices in research. Services can run permanently or on demand through the NIRD Toolkit, which was improved during 2022.

The NIRD Service Platform consists of two clusters with computing resources, orchestrated by a Kubernetes engine with a CPU/GPU architecture, that let services and computing environments run data-intensive computing workflows such as pre-/post-processing, visualisation and AI/ML analysis. In 2022, a new instance of the NIRD Service Platform was procured and installed in Lefdal Mine Datacenter to provision services on the new-generation NIRD storage infrastructure. This instance will be put into production jointly with the new NIRD in 2023. In 2022, the average Kubernetes CPU allocation was 763 cores (against a total of 576 physical cores), and the highest allocation was 834 cores.
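The allocation figures above are higher than the number of physical cores. One plausible reading is that allocations are counted in logical or overcommitted CPU units; that interpretation is an assumption, since the report does not say how allocation is measured. A minimal sketch of the arithmetic, using only the three figures quoted above:

```python
# Figures quoted in the report for the NIRD Service Platform in 2022.
PHYSICAL_CORES = 576
AVERAGE_ALLOCATED = 763
PEAK_ALLOCATED = 834


def allocation_ratio(allocated: int, physical: int) -> float:
    """Return allocated CPUs per physical core (above 1.0 means more
    CPUs were allocated than physically exist)."""
    if physical <= 0:
        raise ValueError("physical core count must be positive")
    return allocated / physical


average_ratio = allocation_ratio(AVERAGE_ALLOCATED, PHYSICAL_CORES)
peak_ratio = allocation_ratio(PEAK_ALLOCATED, PHYSICAL_CORES)

print(f"average: {average_ratio:.2f} allocated CPUs per physical core")
print(f"peak:    {peak_ratio:.2f} allocated CPUs per physical core")
```

On these numbers, the platform ran at roughly 1.3 allocated CPUs per physical core on average and about 1.45 at peak.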


NIRD Toolkit

The NIRD Toolkit is a research platform for easily spinning up software on demand on the NIRD Service Platform, thus avoiding cumbersome IT operations. With the NIRD Toolkit, computations with Spark, RStudio, and several popular artificial intelligence algorithms are possible in a few clicks. In 2022, the authentication and authorisation mechanisms were modified to make access and role administration easier for all end users, regardless of their affiliation. Furthermore, both the security of the service and the user-friendliness of the web-based front end were improved.

NIRD Research Data Archive

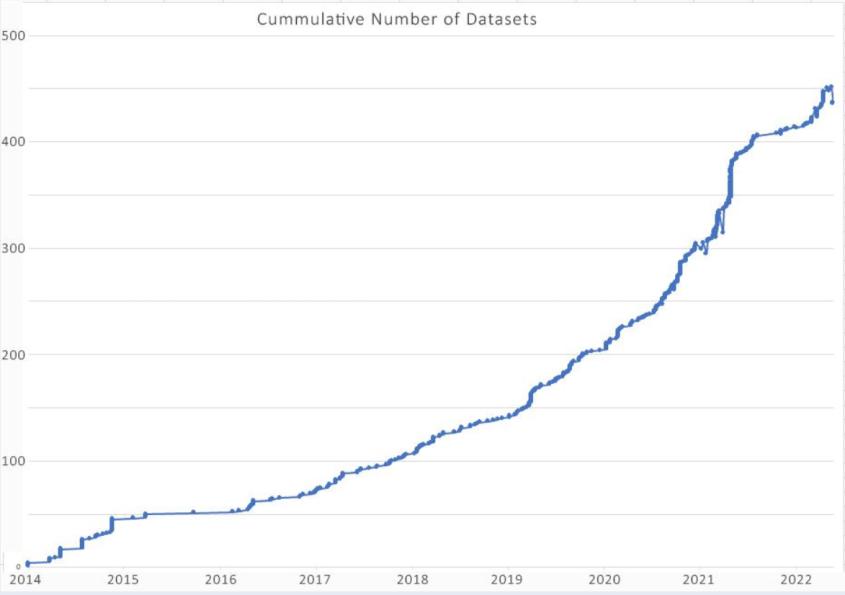

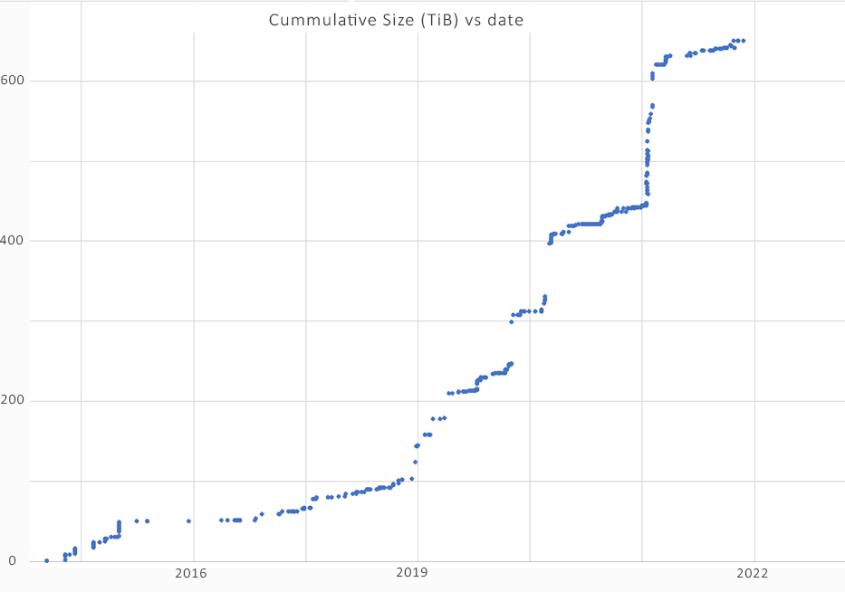

The current Research Data Archive came into service in June 2014 and has since stored 455 datasets, corresponding to almost 700 TiB in total (about 1.4 PiB, including replicas).
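The two volumes quoted above are consistent with each dataset being kept in two copies. The copy count of two is an assumption inferred from the figures, not something the report states. A short sketch of the unit conversion:

```python
TIB_PER_PIB = 1024  # binary units: 1 PiB = 1024 TiB

primary_tib = 700    # "almost 700 TiB" quoted in the report
assumed_copies = 2   # assumption: one primary copy plus one replica


def total_pib(volume_tib: float, copies: int) -> float:
    """Total stored volume in PiB when every dataset is kept `copies` times."""
    return volume_tib * copies / TIB_PER_PIB


total = total_pib(primary_tib, assumed_copies)
print(f"{total:.2f} PiB including replicas")
```

With two copies, 700 TiB comes to just under 1.4 PiB, which matches the rounded figure in the text.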

Researchers' growing awareness of FAIR principles and data management has increased the number of datasets deposited in recent years (see Figure X and Figure Y). The sharp rise observed in 2021 is remarkable and probably due to many projects ending their research cycles.

In 2022, the Research Data Archive reached its capacity limits, and we had to temporarily close the archive to large-volume datasets. This is visible from the plateau in data volume growth in 2022 (Figure X). However, the deposition of small datasets has never stopped and keeps increasing (Figure Y).

The data landscape in Norway has changed since 2014, and the need for new and more user-friendly functionality is evident. This is why we launched a new Research Data Archive project in 2021. The project aims to redesign the concept for archiving within the Sigma2 scope. In 2022, the project designed the next-generation Research Data Archive’s architecture to comply with several identified use cases and to reuse existing components and expertise. The roles of APIs and web services for integration with other systems and data sources are emphasised. Competencies and components will be procured, and the new system will be implemented in 2023.

Sensitive Data Services (TSD)

Sensitive Data Services (TSD) is a service delivered by the University of Oslo and provided as a national service by Sigma2.

TSD offers a remote desktop solution for secure storage and high-performance computing on sensitive personal data. It is designed and set up to comply with the Norwegian regulations for individuals’ privacy. TSD has been a part of the national e-infrastructure since 2018. We own 1600 cores in the HPC cluster. We also own 1370 TB of the TSD disk, which provides storage for general use or HPC use.


Basic User Support

Basic user support provides a national helpdesk that deals with user requests such as issues relating to access, systems usage or software. Support tickets are handled by highly competent technical staff from NRIS. The number of cases handled in our basic user support service continued to increase in 2022.

Advanced User Support

Advanced User Support (AUS) lets the experts within NRIS spend time going deeper into the researchers’ challenges. The impact is often high, and the results can be astonishing.

The AUS activities of 2022 span the usual wide range of topics, from bioinformatics to astroparticle physics. Here we work to improve user experience by aiding with application interfaces and making shared input data more readily available. With extensive GPU resources becoming available last year, especially on LUMI, our team of GPU experts in NRIS has been highly engaged in enabling and porting codes and workflows for accelerators. We wish to maximise the footprint of Norwegian projects on these external resources and thereby the return from participating in the EuroHPC Joint Undertaking and EU programmes such as Digital Europe.

Additionally, interest has picked up for the AUS User Liaison collaborations. This framework allows experts to have a foot inside the NRIS activities while maintaining a close tie to specific application communities, major research infrastructures and centres of excellence.

Course Resources as a Service (CRaaS)

Interest in Course Resources as a Service (CRaaS) continued to increase in 2022. Altogether, we ran 11 different projects, and no fewer than 448 users accessed the service.

Activities have mainly been in the fields of biology, physics, GPU programming, and training in the use of HPC. The average length of the courses is 41 days. For the most part, CRaaS courses use the supercomputers Fram and Saga, but we have also had some activities on the NIRD Toolkit.

“Materials science has been transformed by the development of accurate prediction tools based on quantum mechanics (from first principles) and access to high-performance computing resources. The CRaaS resources make it possible to give students hands-on experience with a simulation environment that resembles those being used worldwide by professional researchers in academia, research institutes, and the industry. The teaching is thus relevant and realistic, which is made possible by CRaaS,” says Ole Martin Løvvik, Chief Scientist at SINTEF and Adjunct Professor at UiO.

Practice makes perfect

NRIS has an extensive education and training programme to assist existing and future users of the national e-infrastructure services. The courses aim to give the participants an understanding of our services and to help them use the supercomputers efficiently.

Since 2021, the NRIS Training Team has conducted 7 courses, with nearly 400 participants. In addition, the NRIS training experts have contributed to four collaborative training events that benefited 200 participants.

The NRIS Training Team has made a continuous effort to provide more extensive documentation and tutorials.

Informal meetings

Once a month, we invite users to our open question-and-answer session. Here users can meet NRIS staff, discuss problems and project ideas, and provide feedback and suggestions on how to improve the national services. Everyone is welcome to participate.

What do users say?

Some quotes from users who have attended NRIS training:

- Excellent course, Thank you! It will definitely give me more flexibility and independence.

- I have been user of Norwegian HPC facilities for over 15 years and of NIRD (formerly Norstore) since its beginning. I was therefore a bit concerned that the course would be too basic and not teach me anything new. But I was positively surprised about how many new things relevant to my work I learned during the course each day.

- Thank you very much for such a nice and comprehensive course. It exceeded my expectations. Usually, these types of courses are hard to follow when you have little prior knowledge - this was not the case at all this time. I am very happy and learned a lot.

Speeding up the digitalisation of industry

(The video is in Norwegian).

A lot happened in 2022 for the National Competence Centre for HPC (NCC). The Centre aids the industry, public and academic sectors by tapping into the competencies needed to take advantage of technologies such as High-Performance Computing (HPC), Artificial Intelligence (AI) and Machine Learning (ML), in addition to associated technologies.

The Centre is a collaboration between Sigma2, SINTEF and NORCE, and we offer expertise and access to national e-infrastructure services.

Webinars and events

We initiated a webinar series to promote the NCC and to bring relevant viewpoints from key players in industry and the public sector, such as Norges Bank Investment Management (NBIM), Equinor, Innovation Norway and SINTEF, to a larger audience. The most popular one so far is the NBIM webinar, where the CTO elaborated on their successful use of the commercial cloud. All webinar recordings are available on Sigma2's YouTube channel.

Extended funding until 2025

NCC has also coordinated training events and contributed to competence mapping and overall goals of the EuroCC and CASTIEL projects. There are similar centres to the Norwegian NCC set up across Europe, initially categorised as projects with a two-year duration. The good news during the autumn of 2022 was thus that the projects got extended for another three years, meaning that the NCC will continue receiving external funding until 2025.

Collaborations

We have made a significant effort to start collaborating with other NCCs: with Sweden on business development, training portfolio and setup, and with Switzerland on the use of APIs to access clusters, container services/orchestrators and dynamic infrastructure. We also supported Romania in outreach by attending conferences and presenting what we have done in Norway, and Croatia on resource management in national infrastructure.

Furthermore, two European Digital Innovation Hubs (EDIHs), Oceanopolis and Nemonoor, were established in Norway last year, and we have started a collaboration with them.

Conference for coastal industry

At the end of the year, we arranged a conference for coastal industries in West Norway. We invited keynote speakers from NORA, Innovation Norway, the two EDIHs, and the Swedish NCC, in addition to mature industry companies that presented accomplishments and lessons learned. Less mature companies presented paths and plans toward digitalisation that will ultimately lead to adopting and using technologies related to high-performance computing. The conference was opened by the Minister of Local Government and Regional Development, Sigbjørn Gjelsvik.

Industry use cases

The National Competence Centre for HPC is proud to have helped many companies get started with advanced technology during the year. We have helped both on land - with optimisation of crop distribution in fields - and at sea with reducing the extent of damage from hurricanes. And we have also assisted a company that produces fibreglass with deviation detection.

Anomaly detection of noisy time series

3B-the fibreglass company produces different types of fibreglass. The products are wound onto bobbins at typically 50 km/h. The production is, to a high degree, automatic.

One of the remaining manual procedures is detecting breaking fibres during the winding process. Can machine learning be used for early break detection? Read on to learn more about NCC’s collaboration with 3B-the fibreglass company.

Artificially intelligent agriculture

Company: DigiFarm

Sector: Agriculture

DigiFarm is one of Norway's leading agri-tech startups specialising in developing deep neural networks for automatically detecting field boundaries and sown areas based on high-resolution satellite data. The models are trained in several international regions, including Europe, South America, APAC (Thailand, Vietnam, and India), the USA and Canada. Training requires a large amount of image data as well as annotation data.

During the project, NCC provided DigiFarm with access to HPC and performed workflow analysis to optimise the utilisation of HPC resources and graphics processing units (GPUs). The project is still running, and the next step is to provide an API for HPC that allows their automation system to dispatch and monitor workflows automatically and to ensure that they run correctly in the HPC environment.

Now autonomous vessels are coming

Company: Zeabuz

Sector: Transportation

The Trondheim-based NTNU spin-off company Zeabuz launched its autonomous AI-controlled vessel during the autumn of 2022. Even in a limited ocean space such as Trondheimsfjorden, it is impossible to physically test all conceivable scenarios a ferry may encounter in daily operation. A digital twin of the vessel is therefore used to digitally test for and learn from anticipated, unforeseen, and unimaginable events.

Testing and machine learning like this require a lot of HPC power and graphics processing units (GPUs). NCC has so far helped Zeabuz identify the need for an API for HPC so that the test system for the digital twin of the autonomous vessel can run efficiently and on demand on HPC systems. Efforts for the API in this project will be coordinated with the actions in the use case with DigiFarm to ensure portability and compatibility. The use case is still ongoing.

Mitigating the impacts of hurricanes

Company: OceanTherm

Sector: Climate

OceanTherm develops technology that aims to mitigate the impacts of hurricanes. The high temperature of the surface water in tropical areas is likely a key condition for hurricanes to absorb energy and grow into the higher, more devastating categories.

Deeper down in the ocean the water temperature is cooler. OceanTherm develops technology that can lift cold water to the sea surface, cooling it and thus preventing hurricanes from building in strength.

The large-scale nature of the problem makes it helpful to deploy workflows that utilise HPC to answer questions related to system design, performance and, ultimately, operation and preparedness. Here, NCC and relevant research groups at SINTEF work together with OceanTherm to standardise workflows into a structured computational pipeline involving public data sources and, at present, three hierarchically nested computational modelling systems. All three systems rely heavily on HPC resources to produce timely and accurate results. The standardised workflow will enable documentation, reproduction, and high throughput, so that a wide set of scenarios can be investigated continuously.

Sustainable aquaculture

Company: Sustainovate

Sector: Marine biomass

Sustainovate is a European consultancy company that works with sustainable marine business development and innovation. They work with everything from software and scientific research, to introducing partners to new technologies and ideas, and offering substantive expertise in acoustics and fisheries software.

NCC has provided Sustainovate with assistance and competence transfer when it comes to analysing large amounts of data. As statistical modelling of large datasets commenced, some challenges arose, and we are still working with Sustainovate to improve the data analysis processes.

Data analysis to uncover trends in smuggling activity

Company: Norwegian Customs

Sector: Public

The Norwegian customs office keeps a careful record of goods that cross our national border. Their objective is to make it consistent, easy and efficient to follow customs rules and laws, to create a high perceived risk of detection, and to uncover activities falling outside of the rules and laws.

This use case is in its initial phase, and the NCC's role is to create proofs of concept for improving the analysis of trends and outliers in order to detect anomalies and/or repeated breaches of the rules, together with associated knowledge transfer and access to HPC resources.

The world outside our doorstep — International Collaborations

EuroHPC Joint Undertaking

The EuroHPC Joint Undertaking (JU), now comprising 32 participating states from across Europe, is continuing to put Europe at the global forefront of HPC and related technologies. In 2022, an agreement was signed for Europe's first exascale system, Jupiter, which is expected to arrive in 2023.

Big advances, and investments, were also made in the area of quantum computing. A second phase for the national Competence Centres was launched, providing three more years of funding for activities for the Norwegian Competence Centre headed by Sigma2.

EuroHPC JU is funded by the Horizon Europe and Digital Europe programmes, the Connecting Europe Facility and the participating states.

Norway is on the JU’s Governing Board, represented by the Research Council. Sigma2 has regularly supported the Research Council as an adviser, and as the national e-infrastructure provider, Sigma2 is responsible for implementing Norwegian participation in EuroHPC.

LUMI: Europe's fastest supercomputer

The LUMI pre-exascale supercomputer, in which Norway owns a stake through Sigma2, was put into full production in 2022. LUMI is currently ranked as the most powerful high-performance computing (HPC) system in Europe and third in the world.

Both the CPU and GPU partitions of LUMI are now in regular operation, and so far 32 Norwegian projects have been given allocations on the Norwegian part of the system. From 2022, the LUMI system is part of the regular access calls from the EuroHPC Joint Undertaking and our national calls. We have participated actively in the LUMI Consortium, providing two experts to the LUMI User Support Team (LUST), experts to the various interest groups, and one member of the Operational Management Board (OMB).

LUMI-Q: Quantum technology is coming

Quantum computing can dramatically increase the quality and impact of research and innovation efforts by enabling solutions to problems far beyond the capabilities of existing classical supercomputers. A significant global race is taking place towards such capacities, requiring not only theoretical and technical advances but also advancements in software, along with competence building and education.

Currently, Norway is involved with three partners (Sigma2, Simula Research Laboratory, and SINTEF) in LUMI-Q, one of EuroHPC’s first six initiatives towards building European quantum computers in connection with existing high-performance computing centres across Europe. This is a strong example of how Norway can benefit from European competence building and infrastructure access for research and innovation in quantum computing.

PRACE — at the heart of HPC in Europe

PRACE, the Partnership for Advanced Computing in Europe, is transforming in response to the new EuroHPC era. PRACE will be the basis for many consortia responding to EuroHPC calls and is seeking to become a private member of the EuroHPC Joint Undertaking. Having provided services such as training and a framework for peer-reviewed access, PRACE will continue to have a vital role in EuroHPC as the independent voice of users and HPC centres. PRACE also has experience in addressing industry needs, in particular those of SMEs. In the EuroHPC era, no HPC resources will be handed out through PRACE, but PRACE will represent the interests and identify the needs of users of HPC and related technologies in Europe.

PRACE 6IP

The 6th and final PRACE Implementation Phase (6IP) project concluded in late 2022, closing a long era of significant developments and contributions to the European HPC community at large. It also marks the end of PRACE 2 and of funding from Horizon 2020. Norway has participated in all PRACE IP projects since the Preparatory Implementation Phase (PRACE-PP) project in 2008, which led to the notable deployment of the Numascale system at the University of Oslo (UiO).

PRACE 6IP, the last IP project, has since its start in 2019 seen significant contributions from NRIS staff in various work packages, including lightweight virtualisation for HPC (containerised workloads), notable Best Practice Guides (BPGs) and forward-looking software solutions for codes capable of utilising the coming exascale systems. Norway, through Sigma2, has additionally contributed to the PRACE Distributed European Computing Initiative (DECI) programme.

PRACE DECI

The PRACE DECI (Distributed European Computing Initiative) was a resource exchange programme for PRACE members which provided researchers all over Europe with access to Tier-1 (national level) supercomputing resources. DECI operated within the PRACE environment from mid-2011 until the last call, DECI-17, finished mid-2022.

NRIS provided CPU hours to DECI from 2013 through 2022. A total of 20 projects received grants, and 18 of them together consumed 55 million CPU hours during this time. Paulo Silva's project was the largest, using 36 million CPU hours from October 2021 to February 2023 (see also the list of top 10 HPC projects above, where Silva holds 10th place).

NeIC: the Nordic e-Infrastructure Collaboration

Development of best-in-class e-infrastructure services beyond national capabilities

Sigma2 is a partner in NeIC, which brings together the national e-infrastructure providers in the Nordics and Estonia. NordForsk hosts NeIC and facilitates cooperation on research and research infrastructure across the Nordic region.

Norway presently chairs the NeIC board and, from 2022, also the NeIC Provider Forum, a forum of representatives from the national service providers that aims to enhance synergies and ensure that NeIC activities are in line with national strategic initiatives.

Several thematic forums were established under NeIC in 2022, in particular for sharing competencies, establishing dialogue platforms for sensitive data providers in the Nordic and Baltic countries, and discussing common Nordic interests in EOSC-related projects.

The 10-year Memorandum of Understanding (MoU) underpinning NeIC will expire in 2023. Work is under way to revise and renew the basis for the NeIC organisational structure and funding, along with a new agreement.

NeIC is based on strategic collaboration between the partner organisations CSC (Finland), SNIC (Sweden), Sigma2 (Norway), DeIC (Denmark), RH Net (Iceland) and ETAIS (Estonia).

CodeRefinery 3

The CodeRefinery project’s goal is to provide researchers with training in the tools and techniques needed to create sustainable, modular, reusable, and reproducible software. CodeRefinery thus acts as a hub for FAIR (Findable, Accessible, Interoperable, and Reusable) software practices.

In 2022, phase 3 of CodeRefinery started. The project delivers training events, training materials and training frameworks, as well as a set of software development e-infrastructure solutions coupled with the necessary technical expertise, onboarding activities and best practice guides. Together, these form a Nordic platform. In 2022, CodeRefinery conducted training events and arranged workshops for over 500 people.

Puhuri 2

Puhuri aims to develop seamless access to the LUMI supercomputer and other e-infrastructure resources used by researchers, public administration and innovating industry. The project will continue to develop and deploy transnational services for resource allocation and tracking, as well as federated access, authorization, and group management.

The portals are integrated into the Puhuri Core system, which propagates the resource allocations and user information to the LUMI supercomputer. Usage accounting information from LUMI flows back to the allocator’s portals, providing near real-time statistics about utilisation. The LUMI resource allocators in the LUMI consortium countries started to use Puhuri Portals in 2021, and the capabilities of the portals have been greatly extended through the work of the Puhuri project in 2022.

Nordic Tier-1

In the Nordic Tier-1 (NT1), the four Nordic CERN partners collaborate through NeIC and operate a high-quality and sustainable Nordic Tier-1 service supporting the CERN Large Hadron Collider (LHC) research programme. It is an outstanding example of promoting excellence in research in the Nordic Region and globally, and it is the only distributed Tier-1 site in the world. There are 13 Tier-1 facilities in the Worldwide LHC Computing Grid (WLCG), and their purpose is to store and process the data from CERN. The Nordic Tier-1 Grid Facility has been ranked among the top three Tier-1 sites according to the availability and reliability metrics for the ATLAS and ALICE Tier-1 sites.

By combining the independent national contributions through synchronised operation, the total Nordic contribution reaches a critical mass with higher impact, saves costs, pools competencies and enables more beneficial scientific return by better serving large-scale storage and computing needs. In 2021, the focus was on NT1’s long-term sustainability to meet the challenges posed by the High-Luminosity upgrade of the LHC.

EOSC-Nordic project

The high-profile EOSC-Nordic project, coordinated by NeIC and with significant NRIS contributions, concluded in late 2022 with high marks. Ground-breaking for Nordic collaboration in a European context, the EOSC-Nordic project made significant achievements paving the way for participation in the European Open Science ecosystem and facilitating future benefits for Norwegian research activities.

The project aimed to develop and enhance the Open Science and FAIR uptake in the Nordic countries and provide access to cutting-edge digital research infrastructure services for researchers and scientists. Over the years, NRIS has increased its support for digitally distributed pan-Nordic research and data management by providing access to advanced digital research services and tools through the EOSC.

NRIS has contributed strongly to key outputs, such as showcasing service deployment and cloud computing on the Nordic cloud resources, demonstrating integrated data management workflows in the Nordics, and designing the concept for an actionable data management plan (DMP) for brokering distributed resources. All deliverables are published in Zenodo as requested by the EU.

EOSC

The European Open Science Cloud's (EOSC) ambition is to provide European researchers, innovators, companies and citizens with a federated and open multi-disciplinary environment where they can publish, find and reuse data, tools and services for research, innovation and educational purposes. EOSC supports the EU’s policy of Open Science and aims to make the EU a global leader in research data management and to ensure that European scientists enjoy the full benefits of data-driven science.

Since 2017, several projects have been working to define and establish policies and infrastructure to form the EOSC. It is gradually becoming a reality for Norwegian and international users. Sigma2 and our partners in NRIS have offered competencies and contributed to the EOSC since the first-generation projects (starting from EOSC-hub in 2018). Sigma2 supports the partnership of Norwegian institutions with the EOSC Association by coordinating activities related to delivering services and infrastructure to the EOSC, where possible in synergy with activities in NeIC and under the EuroHPC Joint Undertaking umbrella.

Skills4EOSC

With HK-Dir, the Directorate for Higher Education and Skills in Norway, Sigma2 became a partner in the Skills4EOSC project in 2022. The project brings together the knowledge of national, regional, institutional and thematic Open Science (OS) and Data Competence Centres from 18 European countries to unify the current training landscape into a joint and trusted pan-European ecosystem. The goal is to accelerate the upskilling of European researchers and data professionals in FAIR and Open Data, data-intensive science and Scientific Data Management.

DICE — Data Infrastructure for EOSC

In 2020 Sigma2 and several international partners submitted a proposal to the H2020 INFRAEOSC-07a2 call. The project aims at supporting the implementation of EOSC with e-infrastructure resources for data services. The proposal was granted, and the Data Infrastructure Capacity for EOSC (DICE) project started in January 2021.

DICE is enabling a European storage and data management infrastructure for EOSC, providing generic services to store, find, access, and process data consistently and persistently while enriching the services to support sensitive data and long-term preservation. Through this project, Sigma2 offers storage and sensitive data service resources to the users coming from the EOSC portal, thus supporting cross-border collaboration between Norwegian and European scientists.

In 2023, Norway will offer 50 TiB of NIRD Storage to EOSC through DICE for users who request and are granted access to the resources through the EOSC Virtual Access mechanism.

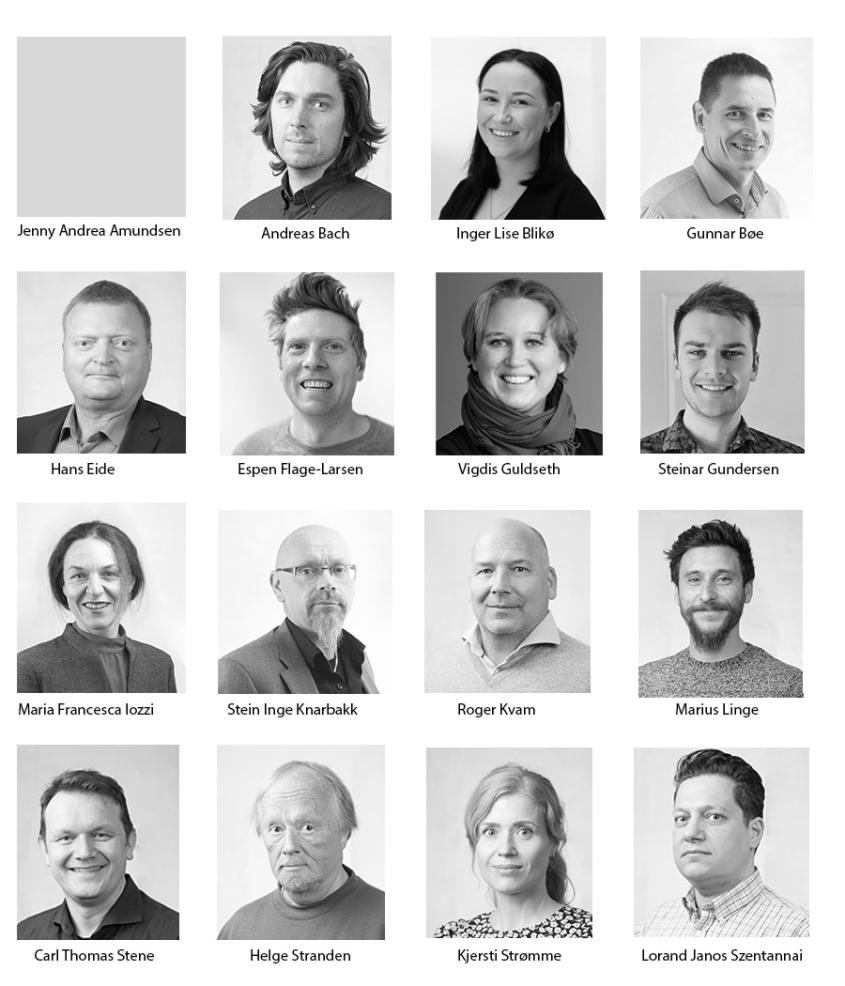

Behind the scenes — meet the team

NRIS is the name of the collaboration between the partner universities of Bergen, Oslo, Tromsø, NTNU and Sigma2. The national e-infrastructure services are delivered under the NRIS umbrella.

NRIS provides a geographically distributed competence network and ensures researchers get quick and easy access to domain-specific support.

NRIS is involved in research-oriented activities like training, advanced user support and contributing to the Technical Working Group that prepares the work for our Resource Allocation Committee.

At the end of 2022, NRIS counted around 50 members, which amounts to 37 full-time equivalents.

The Operations Organisation

The Operations Organisation (Driftsorganisasjonen, DO) is part of NRIS and delivers the operations and technical user support connected to the national services. Last year, DO focused on providing a secure, stable, and user-friendly platform for researchers to access, store, and manage their data.

Except for one big incident on Fram in July, all the systems had an uptime above 99%, which is a nice improvement from earlier years and very good when considering the complexity of large HPC systems.

In user support, a significant change was the successful switch from Request Tracker (RT) to GitLab Service Desk for handling user requests. This change gives us one tool to handle all tickets, incidents and requests, so staff can easily connect user tickets to internal issues and vice versa.

An important focus area for NRIS in 2022 was the implementation of FitSM, an IT service management framework. During 2022, we focused on getting scopes in place for the processes defined by FitSM and ensuring top management support. We ended the year with an excellent maturity assessment that paves the way for continuous improvements in the coming year. Big thanks to all the staff for their outstanding work operating our systems!

Sigma2

Sigma2 AS owns and has strategic responsibility for the national e-infrastructure for large-scale data and computational science in Norway.

In addition to coordinating the work in the umbrella organisation NRIS, we also manage Norway’s participation in Nordic and European e-infrastructure organisations and projects.

On 1 January 2022, Sigma2 became a subsidiary of the newly established Norwegian Agency for Shared Services in Education and Research (Sikt). We thus changed our name from UNINETT Sigma2 to Sigma2.

Our activities are jointly financed by the Research Council of Norway and the university partners (the Universities of Bergen, Oslo and Tromsø, and NTNU). Today, we have 16 employees, and our head office is in Trondheim. We strive for diversity and gender balance and work to promote equality in our organisation, committees and the Board of Directors.

The Coordination Committee

The committee's responsibility is to ensure that the intentions and plans for the collaboration with the BOTT universities are fulfilled and implemented on time, and that cooperation between the partners functions well.

- Solveig Kristensen, Dean, The Faculty of Mathematics and Natural Sciences (UiO)

- Tor Grande, Pro-Rector for Research, NTNU

- Camilla Brekke, Pro-Rector Research and Development, UiT

- Gottfried Greve, Vice-Rector for Innovation, Projects and Knowledge Clusters, UiB

- Gunnar Bøe, Managing Director, Sigma2 AS

- Gard Thomassen (Observer), Assistant Director – Research Computing, UiO

- Vigdis Guldseth, Senior Adviser, Sigma2 AS (Secretary)

The Coordination Committee was established as part of the new Collaboration Agreement signed in 2021.

The Board of Directors

The board is chaired by the Director for the Data and Infrastructure Division at our parent company Sikt. The members are drawn from the four consortium partners, together with one external representative from abroad and a legal expert from a national research institute.

The Board in 2022

- Tom Røtting (Chair), Director for the Data and Infrastructure Division, Sikt

- Kenneth Ruud, Director General, Norwegian Defence Research Establishment (FFI) / Professor UiT

- Terese Løvås, Department Leader, Department of Energy and Process Engineering, NTNU

- Ellen Munthe-Kaas, Associate Professor, Department of Informatics, UiO

- Tore Burheim, CIO/IT-Director, UiB

- Ingela Nystrøm, Professor, Uppsala University

- Øyvind Hennestad, Corporate Lawyer, SINTEF
