This project is completed, and the contract has been awarded to Lefdal Mine Data Centers.

For more information visit:

Background

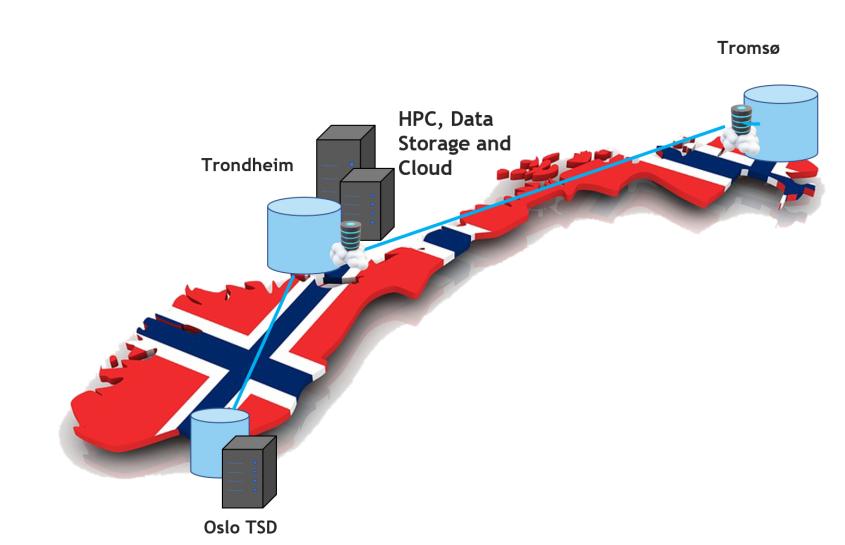

The national e-infrastructure for large-scale data- and computational science has historically been hosted at the four partner universities that are part of the collaboration with Sigma2.

Sigma2's landscape

- Non-Sensitive Data: 3 HPC clusters on two sites + two data centres in Trondheim and Tromsø

- Sensitive Data: Sigma2 owns storage and a large part of the HPC resources in TSD – Oslo

- Uninett backbone for the connectivity between the data centres

- From 2021, the infrastructure will be connected with the pre-exascale HPC system in Finland

- Operational and Investment funding from RCN and the 4 oldest universities

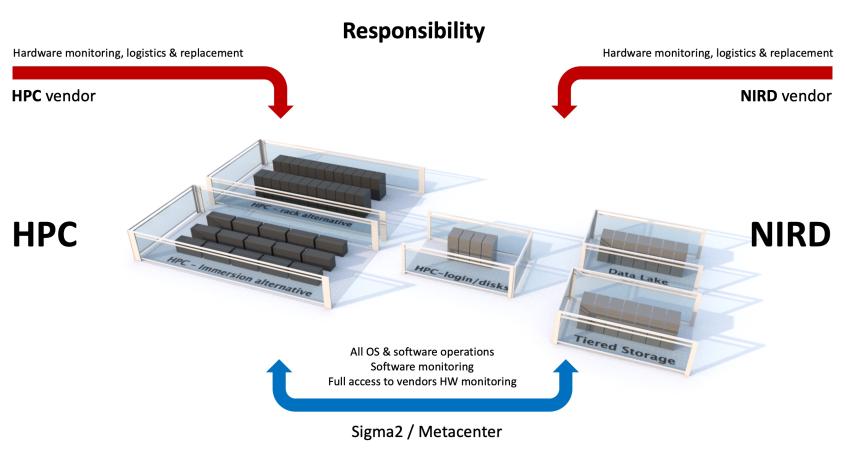

NRIS (Norwegian research infrastructure services) is an important foundation for the successful collaboration on e-infrastructure in Norway. NRIS comprises employees from the four partner universities and Sigma2, and the collaboration aims to consolidate competencies, resources, and services. NRIS is responsible for all OS and software operations and monitoring, with complete access to the hardware monitoring provided by vendors. See illustration below.

Project objectives and desired outcome

In addition to the procurement itself, the objective of the project is to identify the requirements for a co-hosting facility at a professional, secure location that allows the national e-infrastructure to be operated sustainably and cost-effectively. The time-related objective is to be ready for the next generation of national storage systems (NIRD), with equipment delivery in Q4 2021, and for the expected HPC system in Q4 2022.

Project scope and exclusions

The project targets infrastructure within the realm of Sigma2 as shared national resources: the future national storage systems such as NIRD and its potential successors, backup of those storage systems, and the expected future national high-performance computing systems. Existing systems, and hardware operated locally at the universities for local users, are not included in the project for procuring a co-hosting location.

Project considerations

There is a desire to motivate vendors to provide a sustainable and environmentally friendly housing service while maintaining cost-effectiveness and security as required by the national e-infrastructure.

The project may favour services that use less energy to remove heat from the infrastructure, sell the surplus heat to lower the cost of service, or reuse the heat for societal benefit by enabling new business or innovation opportunities. The project group will suggest how these considerations are weighted in the offer selection matrix, following directions from the Sigma2 board. The weights will reflect the importance of each consideration and will be finally approved by the Sigma2 board.
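As an illustration of how a weighted offer selection matrix can work in general, here is a minimal Python sketch. The criteria names, weights, scores, and offer names are all hypothetical and chosen only for the example; the actual matrix and weights are set by the project group and approved by the Sigma2 board.

```python
# Hypothetical criteria and weights, for illustration only.
# The real criteria and weights are decided by the Sigma2 board.
WEIGHTS = {
    "cost": 0.4,
    "security": 0.3,
    "cooling_energy": 0.1,
    "heat_reuse": 0.2,
}


def weighted_score(scores, weights=WEIGHTS):
    """Return the weighted average of per-criterion scores (0-10 scale)."""
    if set(scores) != set(weights):
        raise ValueError("scores and weights must cover the same criteria")
    total_weight = sum(weights.values())
    return sum(weights[name] * scores[name] for name in weights) / total_weight


# Hypothetical offers with made-up scores per criterion.
offers = {
    "offer_a": {"cost": 8, "security": 7, "cooling_energy": 5, "heat_reuse": 2},
    "offer_b": {"cost": 6, "security": 7, "cooling_energy": 9, "heat_reuse": 9},
}

# Rank offers from highest to lowest weighted score.
ranking = sorted(offers, key=lambda name: weighted_score(offers[name]), reverse=True)
```

With these made-up numbers, an offer that is somewhat more expensive can still rank first if it scores well on cooling energy and heat reuse, which is the kind of trade-off the weights are meant to express.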

Frequently asked questions

Q: Will Betzy or any of the current HPC systems be moved?

A: No. So far, only new systems will be installed at a new location.

Q: You are going to procure colocation, a new HPC system and storage system (NIRD). Will this be 3 separate procurements?

A: Yes. HPC and NIRD will be traditional procurements through Doffin. Colocation will not go through Doffin but will be by invitation.

Q: Will the colocation for HPC be based on liquid cooling?

A: HPC will be liquid-cooled, using either direct cooling in high-density racks of up to 100 kW or immersion cooling in tanks (also up to 100 kW).

Q: What about NIRD, will this system be liquid cooled as well?

A: We expect air cooling for NIRD, with racks with liquid-driven chilled doors as an alternative.

Q: What will be the total electricity usage for the HPC system?

A: We simply do not know yet, as the HPC procurement process will start in late 2021, but the colocation needs to provide up to 4 MW for the HPC system.

Q: What will be the total electricity usage for the storage system (NIRD)?

A: In the first year of production, we expect about 100 kW for tiered storage (active) and another 100 kW for the data lake (passive).

Q: Will reuse of energy from HPC be important?

A: All efforts to reduce energy usage are important, in the form of no energy used for cooling, reuse of waste heat or a combination of these, as it is beneficial to the environment, innovation and public spending. These parameters will be considered during the procurement process.

Q: What kind of internet solution is required at the colocation?

A: High-speed redundant fibre networks with 100 Gbit/s speed, and access to Uninett Research Network where most of the users are connecting from.

Q: What are the redundancy requirements when it comes to power?

A: For HPC compute nodes only, no UPS or generator support is needed. All other equipment requires redundancy equivalent to Uptime Institute Tier III.

Q: Would redundant power sources from two different plants be of interest to you?

A: Yes, possibly, for HPC compute nodes, in combination with automatic transfer switches (ATS).

Q: What are the redundancy requirements when it comes to cooling?

A: For HPC compute nodes only, redundant pumps in the colocation's own liquid cooling loops. All other equipment requires redundancy equivalent to Uptime Institute Tier III.

Q: You require Uptime Institute Tier I for HPC. Is that for both cooling and electricity?

A: No, the Tier I requirements relate to cooling, to avoid equipment overheating in case of pump failures. Redundant electricity from two providers with ATS is a plus but not required.

Q: There is a discrepancy between the table showing expected power consumption growth for NIRD 2020 and the number of racks multiplied by the power consumption per rack. Which is correct?

A: The NIRD 2020 storage system has not been chosen yet, so the power consumption requirements for NIRD 2020 are based on expectations and are not exact.