We enhance excellent research for a better world!

This year we have chosen to make a web-based report, and we hope you will enjoy it. You can navigate directly to the section of your choice by clicking the links below. Or you can do as we recommend: begin at the top and take a thorough look back at 2021 with us as you scroll down the page.

Managing Director's corner

We are happy to share updates about our users, our services, and collaborations below. We are also proud to present some interesting use cases from our users.

2021 was an eventful year for Sigma2. In my short video, you can learn more about some of the activities that made last year a good year for the national e-infrastructure, our services and our users.

There is no doubt that research conducted on our infrastructure is beneficial and highly relevant to society. Examples include the Norwegian Institute of Public Health's continuous modelling of the coronavirus pandemic and Norwegian climate researchers' contribution to last year's IPCC report.

Several of our projects contribute to fulfilling relevant UN Sustainable Development Goals, which is also something Sigma2 as a company continually focuses on.

Happy reading!

Press release: Projects

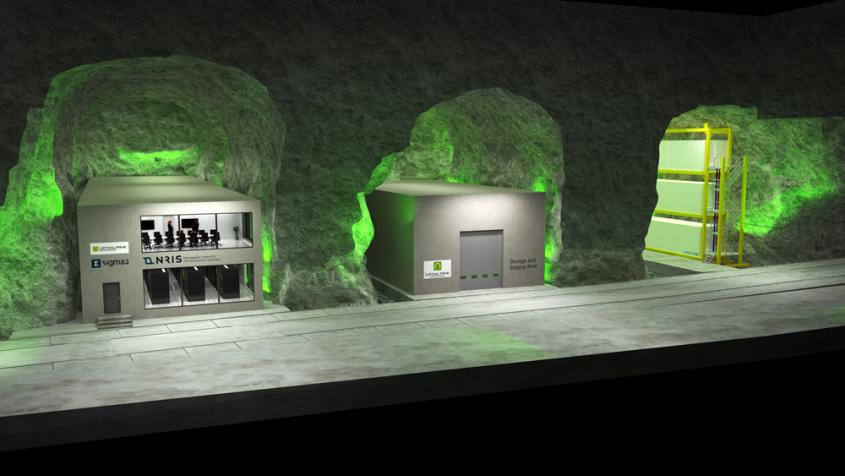

New home for the national e-infrastructure

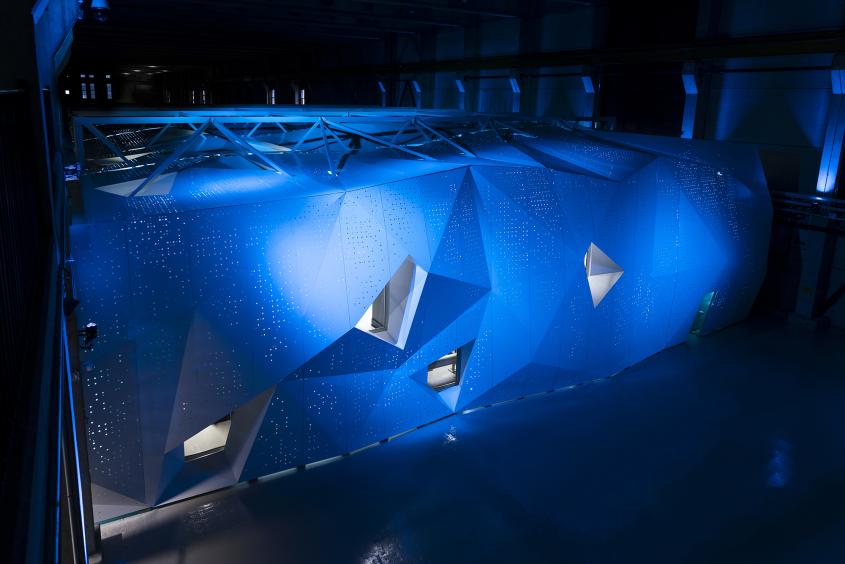

In 2021, we acquired new data centre facilities to house future national supercomputers and storage systems. In the tender competition, Lefdal Mine Datacenter (LMD) received the highest score on the technical, environmental and social assessments. LMD also scored best on cost, and signing the contract was a big milestone for us. The contract will provide us with economies of scale, access to space and flexibility in scaling up the e-infrastructure in the future.

LMD uses the old Lefdal mine facilities located between Måløy and Nordfjordeid in Vestland county. The mine, previously used for olivine extraction, is gigantic: it extends over 120,000 square meters into the mountain, spread over many levels. This gives the computer systems and data located here a very high level of general and physical security.

Do you want to have a look?

Who is using our services?

At the end of 2021, we had more than 2000 active users on our e-infrastructure resources. We allocated more than 1400 million CPU-hours to about 400 different research projects, and more than 150 projects were allocated nearly 15 PB in total on the national storage resources. These allocations correspond to monetary values of around 126 million NOK and almost 14 million NOK, respectively.

In line with the trend of recent years, demand for e-infrastructure services continues to increase.

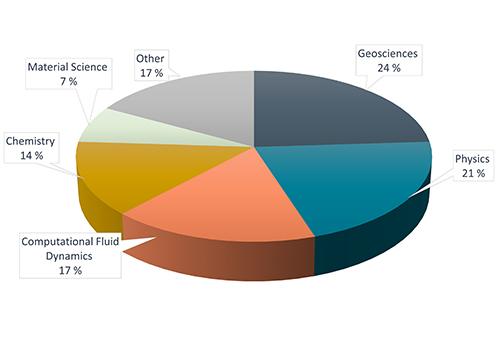

By field of research, Earth Sciences consumes the most computer time by far, followed by Physics and Climate research. Climate research tops the list when it comes to storage resources.

The number of allocation requests for both HPC and storage confirms this growing demand: the number of applications increased by almost 17% last year.

Top 10 HPC projects

At the beginning of November 2021, these were the top 10 HPC projects:

| Title | Project Leader | Allocated CPU-hours |
|---|---|---|
| Solar Atmospheric Modelling | Mats Carlsson | 132 million |
| Combustion of hydrogen blends in gas turbines at reheat conditions | Andrea Gruber | 50 million |
| Bjerknes Climate Prediction Unit | Noel Keenlyside | 43.5 million |
| Quantum chemical studies of molecular properties and energetics of large and complex systems | Trygve Helgaker | 26.5 million |
| Mapping peripheral protein-membrane interactions for a better description of the protein-lipid interactome | Nathalie Reuter | 25 million |
| Heat and Mass Transfer in Turbulent Suspensions | Luca Brandt | 20 million |
| Extreme planetary interiors | Razvan Caracas | 20 million |
| Mechanical properties of nano-scale polymer particles by large-scale molecular dynamics | Zhiliang Zhang | 15 million |
| Computing for INES - Infrastructure for Norwegian Earth System modelling | Mats Bentsen | 15 million |
| SUBSLIDE | Quoc-Anh Tran | 10 million |

Top 10 storage projects

At the beginning of November 2021, these were the top 10 NIRD storage projects:

| Title | Project Leader | Storage (TiB) |
|---|---|---|
| Storage for nationally coordinated NorESM experiments | Mats Bentsen | 1790 |
| Bjerknes Climate Prediction Unit | Noel Keenlyside | 950 |
| High latitude coastal circulation modeling | Ole Nøst | 800 |
| Storage for INES - Infrastructure for Norwegian Earth System modelling | Mats Bentsen | 750 |
| Synchronisation to enhance reliability of climate prediction | Noel Keenlyside | 525 |
| European Monitoring and Evaluation Programme | Hilde Fagerli | 445 |
| Atmospheric Transport - Storage | Massimo Cassiani | 420 |
| Norwegian Earth System Grid data services | Mats Bentsen | 390 |
| Impacts of Future Sea-Ice and Snow-Cover Changes on Climate, Green Growth and Society | Noel Keenlyside | 370 |
| IMR-Internal-Storage | Jon Albretsen | 350 |

Resource allocation — who decides?

Project applications for computing, storage and advanced user support resources are evaluated by the Resource Allocation Committee (RFK).

The RFK is composed of leading Norwegian scientists. It evaluates the proposals and awards access to Sigma2 computing and storage resources twice per year, following a strictly regulated and well-defined process. Proposals must demonstrate scientific excellence, scientific need and that the requested resources will be used efficiently.

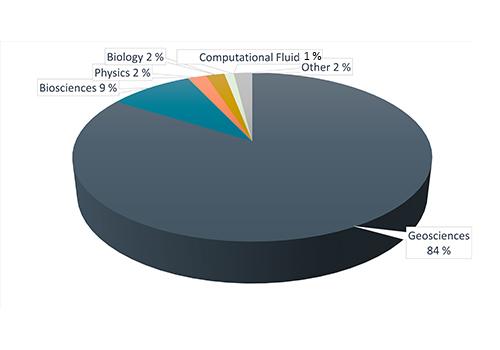

Top HPC users: field of science

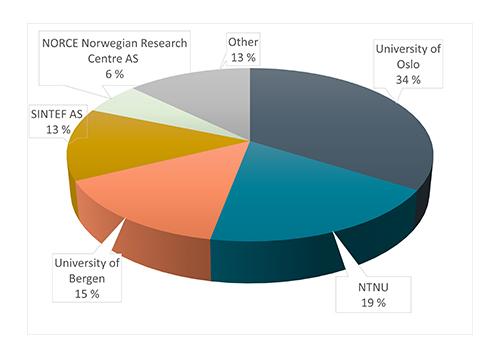

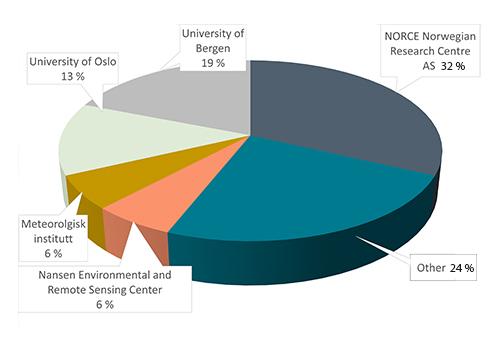

Top HPC users: institution

Top storage users: field of science

Top storage users: institution

Updates on our services

High-performance computing

The past year has been eventful for the HPC service. The set of available national compute resources changed, and we started 2021 by welcoming users from the decommissioned systems Stallo and Vilje to the other national systems.

Demand for resources increased during the year. High demand for storage on Saga and Betzy justified expanding their storage from 1.1 PB to 5.2 PB and from 2.5 PB to 7.7 PB, respectively.

The COVID-19 pandemic affected the availability of the HPC service, as the Norwegian Institute of Public Health reserved a significant part of Saga and Fram. As a result, many Saga users experienced long queue times. This motivated the acquisition of 120 additional compute nodes for Saga, increasing the machine's compute capacity by 64%.

The arrival of the LUMI supercomputer has brought an increasing focus on GPU-based HPC. This led us to acquire four GPU nodes equipped with NVIDIA A100 GPUs for Betzy.

In the autumn, after some delay, Norwegian pilot users got their first hands-on experience with LUMI.

NIRD Project Storage

Together with the national supercomputers, the national storage system NIRD forms the backbone of the national e-infrastructure for research and education in Norway.

In 2021 we procured a new storage infrastructure for Norwegian research data. Like its predecessor, the new storage system is named NIRD – the National Infrastructure for Research Data.

The current storage infrastructure dates from 2017 and has reached end-of-life. Modern research generates large amounts of data, and the need for corresponding storage capacity continues to increase. The 12 petabytes (PB) of storage space, located at two sites in Tromsø and Trondheim, are currently almost fully utilized.

At its initial installation in Lefdal Mine Datacenter, the new NIRD will have a capacity of as much as 30 PiB, and it can later be expanded to about 70 PiB if needed. It will cover current and future data storage needs under the paradigm of Open Science and FAIR data.

The new infrastructure will be based on IBM technology and become operational in summer 2022.

easyDMP - Data Planning

Data planning is essential for all researchers who work with digital scientific data. The easyDMP tool is designed to facilitate the data management process, from the initial planning phase through storing, analysing and archiving to finally sharing the research data at the end of the project. The tool has 1159 users and 1181 plans from 214 organizations, departments and institutions.

EasyDMP is a step-by-step tool that supports several templates: not only templates following the Horizon 2020 and Science Europe recommendations, but also institution-specific templates with prefilled answers and easy-to-use guides.

Since 2021, all projects applying for Sigma2’s storage resources must include a Data Management Plan (DMP) with their application.

In collaboration with the EOSC Nordic project and the Research Data Alliance, we continue to improve the tool. In 2021, we used easyDMP as a testbed to design and prototype the concept of machine-actionable DMPs. This work has built essential knowledge in a rapidly evolving field, for the benefit of the scientific community, service providers and policymakers.

NIRD Service Platform

The NIRD Service Platform consists of two clusters with computing resources, orchestrated by a Kubernetes engine on a CPU/GPU architecture, that enable services and computing environments to run data-intensive workflows such as pre-/post-processing, visualization and AI/ML analysis. In 2021, the Service Platform grew in popularity.

With increasing data volumes, it is often more convenient to analyse and pre-/post-process data "locally", that is, directly on the storage infrastructure without moving the data elsewhere. Furthermore, the ability to spin up community-specific portals that expose large datasets to external users allows data to be shared and reused in line with current research policies and best practices.

The NIRD Service Platform thus complements the NIRD ecosystem and facilitates the FAIRization of the stored data. Services can run permanently or on demand through the NIRD Toolkit. Between January and October 2021, an average of between 738 and 1285 Kubernetes CPUs (lower and upper limits, respectively) were utilized, compared with 576 physical cores.

NIRD Toolkit

The NIRD Toolkit is a research platform for easily spinning up software on demand on the NIRD Service Platform, avoiding cumbersome IT operations. With the NIRD Toolkit, computations on large amounts of data using Jupyter Notebook, JupyterHub, Spark, RStudio and several popular artificial intelligence algorithms are possible in a few clicks.

In 2021, the authentication and authorization mechanisms were modified to make access, and the administration of access roles, easier for end-users regardless of their affiliation.

NIRD Research Data Archive

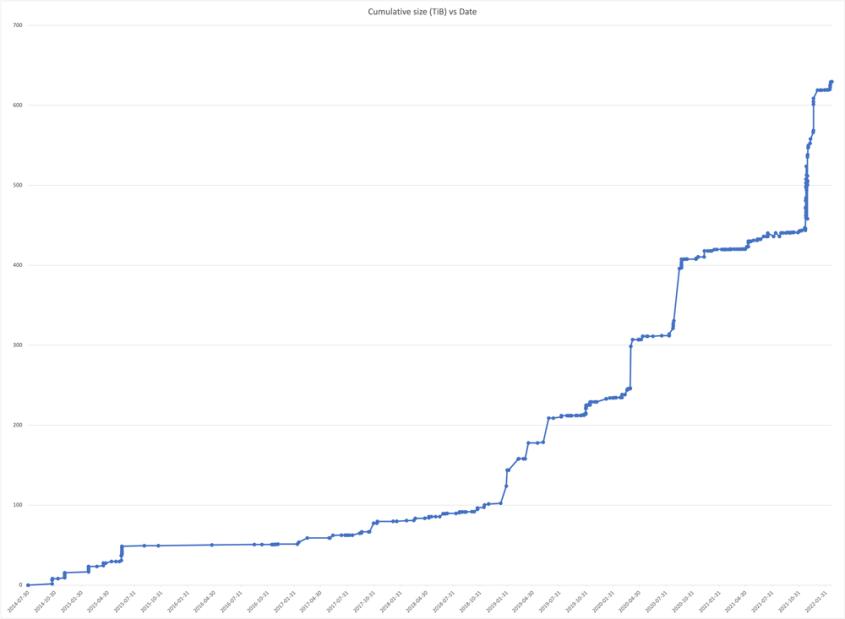

The current archive came into service in June 2014 and has since then stored 394 datasets, corresponding to over 500 TiB in total (about 1.2 PiB, including replicas).

Researchers' growing awareness of FAIR principles and data management has increased the number of datasets deposited in recent years (see the figure below). The sharp rise in the last part of 2021 is remarkable and probably due to many projects ending their research cycles.

By the end of 2021, the Research Archive had reached its capacity limit, and we had to close it temporarily. The operations teams are working to optimise the available capacity to allow more data uploads while we wait for the new NIRD2020 capacity to become available in summer 2022.

Furthermore, the data landscape in Norway has changed since 2014, and the need for new and more user-friendly functionality has grown. This is why the New Research Data Archive project was launched in October 2021. The project aims to redesign the archiving concept within Sigma2's scope and implement the next-generation Research Data Archive.

Prototyping an archiving concept that best fulfils the Open Access paradigm, while still ensuring business confidentiality and personal privacy for the data that require it, is a challenge. The design of the new research archive will be driven by the principle of minimising overlap with, and enhancing synergies and interoperability with, existing services in the national and international landscape. APIs and web services for integration with other systems and data sources will be emphasized.

Sensitive Data Services (TSD)

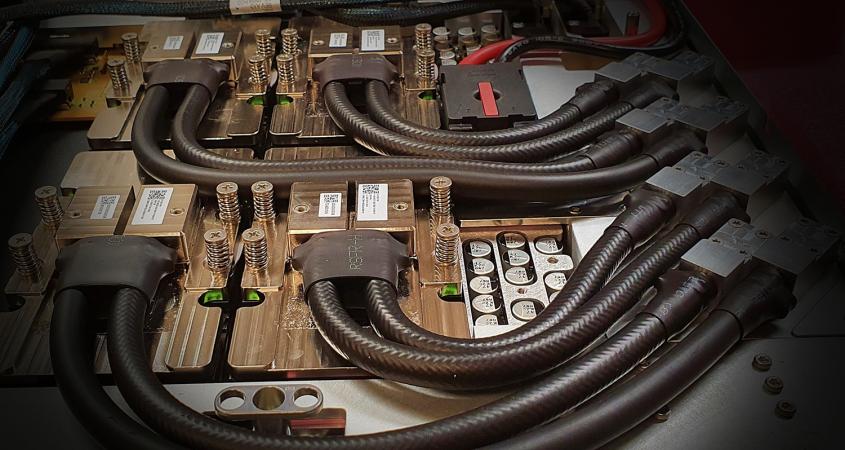

In 2021, Sigma2 significantly increased the capacity and capability of the Services for Sensitive Data (TSD). We procured four huge-memory nodes (1 TiB of RAM each) and two GPU nodes (each with four NVIDIA A100 GPUs) for the Colossus cluster, to further support data-intensive computation for personalized medicine.

TSD offers a remote desktop solution for secure storage and high-performance computing on sensitive personal data. It is designed and set up to comply with the Norwegian regulations for individuals’ privacy.

TSD has been part of the national e-infrastructure since 2018. We own 1600 cores in the TSD HPC cluster, corresponding to 80% of the total HPC capacity available to our users (that is, the part not privately owned). We also own 1370 TiB of TSD disk, which provides storage for general or HPC use.

Basic User Support

Basic user support provides a national helpdesk that deals with user requests such as issues relating to access, systems usage or software. Support tickets are handled by highly competent technical staff.

In 2021, the number of cases handled by our basic user support service increased substantially, to 6500, which is significantly higher than in previous years. The new requirement for DMPs and the proactive follow-up of an ever-growing number of research projects and users may explain the increase.

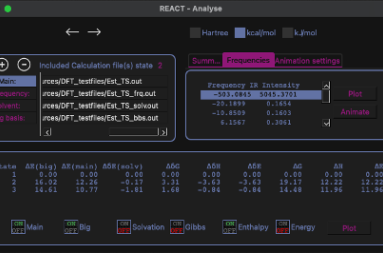

Advanced User Support (AUS)

Advanced User Support is where we really benefit from letting the NRIS experts spend time digging deeper into users’ challenges. The results are often spectacular.

During 2021, we observed a trend: Advanced User Support is increasingly delivered in more ways and across wider areas, not only as the traditional AUS service. We also see more of it in the context of the National Competence Center for HPC (NCC), aiding start-ups in implementing their innovations, supporting research infrastructures and enabling more use of GPU accelerators.

The arrival of the EuroHPC systems is another recent development within the scope of AUS. Although it is still too early to assess, we expect AUS and extended support to be critical in helping projects onto these systems, in particular LUMI. We wish to maximise the footprint of Norwegian projects on these external resources, and thereby the return from participating in the EuroHPC Joint Undertaking and EU programmes such as DIGITAL EUROPE.

Course Resources as a Service (CRaaS)

Course Resources as a Service (CRaaS) is intended to help researchers who want to run courses or workshops that need national e-infrastructure resources as a tool.

With CRaaS, we make the requested CPU-hours and storage on our supercomputers available to course leaders easily and flexibly. As one of our researchers puts it:

"Due to the revolution in sequencing technology, computational demands for analysing biological data have greatly increased during the last decade. For today's biology students, getting hands-on experience using an advanced computational environment like SAGA is therefore incredibly beneficial. CRaaS is a unique resource, which allows students to be thoroughly exposed to such an environment. "

Bastiaan Star, Associate Professor - Centre for Ecological and Evolutionary Synthesis, UiO

During 2021, nine courses with a total of 346 participants were completed using the Sigma2 infrastructure. Most of them used the Saga supercomputer, and the total consumption was approximately 33,463 CPU-hours.

Research at a glance

Assisting the industry

The EuroHPC National Competence Centre (NCC) in Norway is a partnership between NORCE, SINTEF and Sigma2. The partnership offers a novel blend of technical, administrative, domain and business development competence, available infrastructure and collaborative networks with the industry.

Building on existing successful competencies and business models, the Norwegian competence centre aims to support Norwegian industry, the public sector and academia in using HPC services. Since its inception in 2020, the centre has helped several small and medium-sized companies adopt HPC on national infrastructure, as well as apply machine learning in novel ways to industrial production methods.

Projects with NCC involvement

The competence centre has engaged in projects with several SMEs within diverse domains:

- An AI workflow in agriculture that automatically detects field boundaries in order to accurately recommend seed type and estimate quantities. The NCC is helping the SME scale the workflow to expand the business globally.

- Introducing machine learning in industrial production to help reduce scrap and production stops.

- Large-scale modelling of a bubble curtain in weather models to demonstrate a reduction of hurricane energy before field testing.

- HPC based testing environment for a digital twin of zero-emission autonomous public transportation.

- Scaling an AI-based natural language processing method for documents, so that building documentation can be searched and indexed.

- Scaling an electricity market model from its current HPC use to larger HPC systems, increasing the resolution and moving from stochastic modelling to more developed models in order to produce better data for decision-making.

Collaboration and outreach

In addition to the ongoing projects, the competence centre has several new use cases in the pipeline. The current use cases will be published as success stories to increase outreach and raise awareness of the benefits of adopting HPC in industrial business processes.

During 2021, the NCC established collaborations with AI hubs, upcoming European Digital Innovation Hubs (EDIHs) and industry hubs such as NORA, Digital Norway, Datafabrikken and Toppindustrisenteret. These collaborations have increased the competence centre's outreach and have also produced some of the use cases and the new webinar series arranged by the NCC.

DigiFarm is one of Norway's leading agri-tech startups, specializing in the development of deep neural networks for automatic detection of field boundaries and sown areas based on high-resolution satellite data. Read about how they got help from the NCC.

Collaboration across the borders

Norwegian researchers can get access to services, including compute cycles on very large systems, through Sigma2’s partnerships in international collaborations. This is especially interesting for researchers who need capabilities not available at the national level, for example due to the size of the system or a technology-specific solution. Sigma2 participates in several international initiatives and collaborations in the field of high-performance computing and data infrastructure.

EuroHPC

The continuation of the EuroHPC Joint Undertaking (JU) under the Horizon Europe (HE) and Digital Europe (DIGITAL) programmes, together with Norway’s participation in these programmes, has allowed Norway to continue in EuroHPC as a participating state. Norway is represented on the JU Governing Board by the Research Council. Sigma2 has regularly supported the Research Council as an advisor, and as the national e-infrastructure provider, Sigma2 is responsible for implementing Norway's participation in EuroHPC. This arrangement has worked very well, thanks largely to very good collaboration with the Research Council and Sigma2’s many years of experience from PRACE and other European projects.

The EuroHPC JU co-funds the national EuroHPC Competence Centers and the CASTIEL project, which supports the centres and the interaction between them towards the common goals of EuroHPC. Sigma2 implements the Norwegian EuroHPC Competence Center in partnership with SINTEF and NORCE.

The plans drawn up by the EuroHPC JU set a clear ambition of establishing exascale capabilities in Europe by 2023. In parallel, the ambition is to increase computing capacity in the lower tiers and to upgrade existing systems such as LUMI. Since the end of 2021, there have been regular calls for access to EuroHPC systems, which are also open to Norwegian projects.

PRACE

PRACE is nearing the end of its 6th Implementation Phase (6IP) project, which formally lasts until the end of PRACE 2 and Horizon 2020. An extension of 6IP to the end of June 2022 was recently negotiated, drawing on unspent funds and bridging to new calls from EuroHPC under the JU’s 2022-2023 Work Programme. Since its start in 2019, the PRACE 6IP project has seen significant contributions from NRIS staff in various work packages, including lightweight virtualization for HPC (containerized workloads), notably Best Practice Guides (BPGs), and forward-looking software solutions for codes capable of utilizing upcoming exascale systems. Norway, through Sigma2, has additionally contributed to the PRACE Distributed European Computing Initiative (DECI) programme.

Discussions are currently ongoing about the future of PRACE in the EuroHPC era. PRACE is likely to have an important role in EuroHPC, providing a framework and resources for peer-reviewed access, training and high-level user support. PRACE additionally has experience in addressing industry needs, in particular those of SMEs, through the SHAPE programme. In the EuroHPC era, there seems to be little motivation for PRACE hosting members to provide capacity from their non-EuroHPC systems.

The LUMI supercomputer

The LUMI consortium, where Norway is a partner through Sigma2, has been working well throughout 2021. Although there are delays in the delivery of the GPU partition of the system due to the pandemic and the global supply situation, the system will be the first EuroHPC pre-exascale system to go into production.

At present, the installation is expected to be completed by the end of the second quarter of 2022. The CPU partition of LUMI has been in regular operation since November 2021, and so far four Norwegian projects have been given allocations on the Norwegian national part of the system. From 2022, LUMI is part of the regular access calls from the JU, and also of the Sigma2 national call from allocation period 2022.1. Sigma2 has participated actively in the LUMI Consortium, providing two experts to the LUMI User Support Team (LUST) and experts to the various interest groups, and has a member on the Operational Management Board (OMB).

Pilots

Projects from each member country participated in the first pilot phase in the autumn of 2021. From Norway, the first two pilots to test the new supercomputer were projects from the Rosseland Center and the NorESM community. Later, two more projects, from the University of Bergen and SINTEF, were also invited to test.

Stats

At the time of installation, LUMI will be one of the world’s fastest computer systems, with a theoretical computing power of more than 550 petaflops, that is, more than 550 quadrillion calculations per second.

LUMI’s performance will be seven times that of one of Europe’s fastest supercomputers today (Juwels, Forschungszentrum Jülich, Germany).

LUMI will also be one of the world’s leading platforms for artificial intelligence.

Illustrations by CSC.

NeIC

Sigma2 is a partner in NeIC, the Nordic e-Infrastructure Collaboration, which brings the national e-infrastructure providers in the Nordics and Estonia together.

Hosted by NordForsk, which facilitates cooperation on research and research infrastructure across the Nordic region, NeIC is based on strategic collaboration between the partner organizations, CSC (Finland), SNIC (Sweden), Sigma2 (Norway), DeIC (Denmark), RH Net (Iceland) and ETAIS (Estonia).

CodeRefinery

The goal of the CodeRefinery project is to provide researchers with training in the tools and techniques needed to create sustainable, modular, reusable and reproducible software. The project delivers training events, training materials and training frameworks, as well as a set of software development e-infrastructure solutions, coupled with technical expertise, on-boarding activities and best-practice guides, which together form a Nordic platform.

In 2021, CodeRefinery conducted training events and arranged workshops for over 400 people.

Phase two of CodeRefinery was concluded in 2021, and a third phase will start in 2022.

Puhuri

Puhuri aims to develop seamless access to the EuroHPC LUMI supercomputer, as well as to other e-infrastructure resources used by researchers, public administration and innovative industry. The project will develop and deploy trans-national services for resource allocation and tracking, as well as federated access, authorization and group management.

In 2021, the LUMI resource allocators in the LUMI consortium countries started to use Puhuri Portals. The portals are integrated into the Puhuri Core system, which propagates the resource allocations and user information to the LUMI EuroHPC supercomputer. Usage accounting information from LUMI flows back to the allocators’ portals, providing near real-time statistics on utilization.

NICEST2

The second phase of the Nordic Collaboration on e-infrastructures for Earth System Modeling, NICEST2, focuses on strengthening the Nordic position within climate modeling by leveraging, reinforcing and complementing ongoing initiatives. It builds on previous efforts within NICEST and NordicESM. Planned activities include:

- enhancing the performance of climate models and optimizing their workflows so that they can run efficiently on future computing resources (such as the EuroHPC LUMI system);

- widening the use of, and expertise in, evaluating Earth System Models, and developing new diagnostic modules for the Nordic region within the ESMValTool;

- creating a roadmap for FAIRification of Nordic climate model data.

Nordic Tier-1

In the Nordic Tier-1 (NT1) facility, the four Nordic CERN partners collaborate through NeIC and operate a high-quality and sustainable Nordic Tier-1 service supporting the Large Hadron Collider (LHC) research programme. The main objective of NT1 is to continuously deliver sufficient production resources to the Worldwide LHC Computing Grid (WLCG), the large international e-infrastructure built to provide computing and storage for CERN. This commitment runs until the agreed end, which according to the WLCG Memorandum of Understanding is the LHC lifetime plus 15 years.

By combining the independent national contributions through synchronised operation, the total Nordic contribution reaches a critical mass with higher impact, saves costs, pools competences and yields a greater scientific return by better serving large-scale storage and computing needs. In 2021, the focus was on NT1’s long-term sustainability in order to meet the challenges posed by the High-Luminosity upgrade of the LHC.

PaRI

The focus of the Nordic Pandemic Research Infrastructure (PaRI) project has been on the collection and use of data in connection with research on COVID-19 and other pandemics. By embracing and aligning with other European COVID-19 initiatives, the project facilitated COVID-19 and other pandemic research for Nordic researchers.

The project was concluded on 31 October 2021.

EOSC

Since the formation of the European Open Science Cloud (EOSC) began under the Horizon 2020 programme, implementation has moved forward through a series of calls, including the one that funded the EOSC-Nordic project in which Sigma2 participates. The EOSC Association was established in 2021, and EOSC is set to continue as a key element under the Horizon Europe (HE) and Digital Europe programmes. Sikt, Sigma2’s owner institution, has become a member of the association. Sigma2 has participated in several initiatives towards the HE calls for EOSC and will participate in the resulting projects, mainly those leveraging Sigma2 e-infrastructure and competence resources to implement services for end-users. Sigma2 staff have participated in EOSC Working Groups (WGs) and in forums and task forces related to the formation and activities of EOSC, and these activities are expected to continue and expand. Below are brief descriptions of the highlights of Sigma2's EOSC-related activities in 2021.

EOSC Nordic

EOSC Nordic focuses on implementing EOSC at a regional level. Its activities include promoting open science policies for cross-border research, and providing services and support for the implementation of FAIR principles. Sigma2 is responsible for one of the work packages, whose goal is to pilot services in the Nordic region that can later scale out in EOSC. Proof of concept has been demonstrated for cross-border processing in cloud and portal use cases and is in progress for sensitive data use cases. We also actively contribute to mapping open data policies in Norway and the Nordic region.

DICE

In 2020, Sigma2, together with several international partners, submitted a proposal to the H2020 INFRAEOSC-07a2 call. The project aims to support the implementation of EOSC with e-infrastructure resources for data services. The proposal was granted, and the Data Infrastructure Capacity for EOSC (DICE) project started in January 2021. Through this project, Sigma2 offers storage and sensitive data service resources to users coming from the EOSC portal, thus supporting cross-border collaboration between Norwegian and European scientists.

Skills4EOSC

At the very end of 2021, Sigma2 was notified that the consortium behind the Skills4EOSC proposal had been awarded funding from HORIZON-INFRA-2021-EOSC-01. The other Norwegian partner in the consortium is HK-Dir. As the agreement with the EC has not yet been signed and the project is not set to start until after summer 2022, we eagerly look forward to reporting on its activities and achievements in next year’s report.

Who we are

Sigma2

Sigma2 has the strategic responsibility for, and manages, the national e-infrastructure for large-scale data and computational science in Norway. We provide services for high-performance computing and data storage to individuals and groups involved in research and education at all Norwegian universities and colleges, and to other publicly funded organisations and projects. We also coordinate Norway's participation in Nordic and European e-infrastructure organisations and projects. Our activities are jointly financed by the Research Council of Norway and the Sigma2 consortium partners, which are the universities of Bergen, Oslo and Tromsø, and NTNU.

NRIS - Norwegian research infrastructure services

An important foundation for the successful collaboration on e-infrastructure in Norway is NRIS - a collaboration between Sigma2 and the Universities of Bergen, Oslo, Tromsø and NTNU to pool competencies, resources and services.

NRIS consists of highly qualified IT staff at the four universities and Sigma2, with nearly 60 members at the end of 2021, amounting to 34 full-time equivalents.

NRIS is a geographically distributed competence network whose purpose is to ensure that all researchers who use the national e-infrastructure get quick and easy access to domain-specific support close to them.

NRIS staff are involved in research-oriented activities such as training and advanced user support, and contribute to the Technical Working Group that prepares the work of the RFK (Resource Allocation Committee) before each resource allocation period.

Looking back on 2021 with NRIS

One major change last year was changing the name of the collaboration from "Metacenter" to NRIS - Norwegian research infrastructure services.

Do you want to know more about how this came about, and about other highlights? Join Gunnar Bøe and Jon Kerr Nilsen, one of the leaders of NRIS' Operation Organisation, as they take a good look back at 2021 and the work done in NRIS.

The Board of Directors

The board is chaired by the Director for the Data and Infrastructure Division of our parent company, Sikt. The other members are representatives of the four consortium partners, one external representative from abroad and a legal expert from a national research institute.

The Board in 2021:

- Tom Røtting (Chairman), Director for the Data and Infrastructure Division, Sikt

- Kenneth Ruud, Director General, Norwegian Defence Research Establishment (FFI) / Professor UiT

- Terese Løvås, Department Leader, Department of Energy and Process Engineering, NTNU

- Ellen Munthe-Kaas, Associate Professor, Department of Informatics, UiO

- Tore Burheim, IT Director, UiB

- Ingela Nystrøm, Professor, Uppsala University

- Øyvind Hennestad, Corporate Lawyer, SINTEF

The Coordination Committee

The Coordination Committee was established in 2021 as part of the new Collaboration Agreement. The committee's responsibility is to ensure that the intentions and plans for the collaboration with the BOTT universities (UiB, UiO, NTNU and UiT) are fulfilled and implemented on time, and that the collaboration between the partners functions well.

- Solveig Kristensen, Dean, The Faculty of Mathematics and Natural Sciences (UiO)

- Tor Grande, Pro-Rector for Research, NTNU

- Camilla Brekke, Pro-Rector Research and Development, UiT

- Gottfried Greve, Vice-Rector for Innovation, Projects and Knowledge Clusters, UiB

- Gunnar Bøe, Managing Director, Sigma2 AS

- Gard Thomassen (Observer), Assistant Director – Research Computing, UiO

- Vigdis Guldseth, Senior Adviser, Sigma2 AS (Secretary)